
{{Quantum field theory|cTopic=Tools}}

{{Renormalization and regularization}}

In [[quantum field theory]], the [[statistical mechanics]] of fields, and the theory of [[self-similarity|self-similar]] geometric structures, '''renormalization''' is any of a collection of techniques used to treat [[infinity|infinities]] arising in calculated quantities. | |||

When describing space and time as a [[continuum]], certain statistical and quantum mechanical constructions are ill defined. To define them, the [[continuum limit]] has to be taken carefully. | |||

Renormalization establishes a relationship between parameters in the theory, when the parameters describing large distance scales differ from the parameters describing small distances. Renormalization was first developed in [[quantum electrodynamics]] (QED) to make sense of [[infinity|infinite]] integrals in [[perturbation theory]]. Initially viewed as a suspicious provisional procedure even by some of its originators, renormalization eventually was embraced as an important and [[self-consistent]] tool in several fields of [[physics]] and [[mathematics]]. | |||

== Self-interactions in classical physics ==

[[Image:Renormalized-vertex.png|thumbnail|300px|Figure 1. Renormalization in quantum electrodynamics: The simple electron-photon interaction that determines the electron's charge at one renormalization point is revealed to consist of more complicated interactions at another.]] | |||

The problem of infinities first arose in the [[classical electrodynamics]] of [[Elementary particle|point particle]]s in the 19th and early 20th century. | |||

The mass of a charged particle should include the mass-energy in its electrostatic field ([[Electromagnetic mass]]). Assume that the particle is a charged spherical shell of radius <math>r_e</math>. The mass-energy in the field is | |||

:<math>m_\mathrm{em} = \int {1\over 2}E^2 \, dV = \int\limits_{r_e}^\infty \frac{1}{2} \left( {q\over 4\pi r^2} \right)^2 4\pi r^2 \, dr = {q^2 \over 8\pi r_e},</math>

which becomes infinite in the limit as <math>r_e</math> approaches zero. This implies that the point particle would have infinite [[inertia]], making it unable to be accelerated. Incidentally, the value of <math>r_e</math> that makes <math>m_{\mathrm{em}}</math> equal to the electron mass is, up to a numerical factor of order one, the [[classical electron radius]], which (setting <math>q = e</math> and restoring factors of <math>c</math> and <math>\varepsilon_0</math>) is

:<math>r_e = {e^2 \over 4\pi\varepsilon_0 m_{\mathrm{e}} c^2} = \alpha {\hbar\over m_{\mathrm{e}} c} \approx 2.8 \times 10^{-15}\ \mathrm{m},</math>

where <math>\alpha \approx 1/137</math> is the [[fine structure constant]], and <math>\hbar/m_{\mathrm{e}} c</math> is the reduced [[Compton wavelength]] of the electron.
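The numerical value quoted above can be checked directly. The following is a minimal Python sketch, assuming CODATA 2018 values for the constants (these inputs and all function names are illustrative and not part of the article); it evaluates both forms of the expression for <math>r_e</math>.

```python
import math

# CODATA 2018 values in SI units (assumed inputs, not taken from the article)
E_CHARGE = 1.602176634e-19      # elementary charge e, in C
EPSILON_0 = 8.8541878128e-12    # vacuum permittivity, in F/m
M_ELECTRON = 9.1093837015e-31   # electron mass, in kg
C_LIGHT = 299792458.0           # speed of light, in m/s
HBAR = 1.054571817e-34          # reduced Planck constant, in J s


def classical_electron_radius():
    """r_e = e^2 / (4 pi eps0 m_e c^2), in metres."""
    return E_CHARGE**2 / (4 * math.pi * EPSILON_0 * M_ELECTRON * C_LIGHT**2)


def fine_structure_constant():
    """alpha = e^2 / (4 pi eps0 hbar c), dimensionless."""
    return E_CHARGE**2 / (4 * math.pi * EPSILON_0 * HBAR * C_LIGHT)


def reduced_compton_wavelength():
    """hbar / (m_e c), in metres."""
    return HBAR / (M_ELECTRON * C_LIGHT)


if __name__ == "__main__":
    r_e = classical_electron_radius()
    alpha = fine_structure_constant()
    print(f"r_e                  = {r_e:.4e} m")
    print(f"1/alpha              = {1 / alpha:.3f}")
    print(f"alpha * hbar/(m_e c) = {alpha * reduced_compton_wavelength():.4e} m")
```

The two routes to <math>r_e</math> agree to floating-point precision, since both reduce to the same combination of constants.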

The total effective mass of a spherical charged particle includes the actual bare mass of the spherical shell (in addition to the aforementioned mass associated with its electric field). If the shell's bare mass is allowed to be negative, it might be possible to take a consistent point limit.<ref>http://www.ingentaconnect.com/content/klu/math/1996/00000037/00000004/00091778</ref> This was called ''renormalization'', and [[Lorentz]] and [[Max Abraham|Abraham]] attempted to develop a classical theory of the electron this way. This early work was the inspiration for later attempts at [[regularization (physics)|regularization]] and renormalization in quantum field theory. | |||

When calculating the [[electromagnetism|electromagnetic]] interactions of [[electric charge|charged]] particles, it is tempting to ignore the ''[[back-reaction]]'' of a particle's own field on itself. But this back reaction is necessary to explain the friction on charged particles when they emit radiation. If the electron is assumed to be a point, the value of the back-reaction diverges, for the same reason that the mass diverges, because the field is [[inverse-square law|inverse-square]]. | |||

The [[Abraham–Lorentz force|Abraham–Lorentz theory]] had a noncausal "pre-acceleration". Sometimes an electron would start moving ''before'' the force is applied. This is a sign that the point limit is inconsistent. An extended body will start moving when a force is applied within one radius of the center of mass. | |||

The trouble was worse in classical field theory than in quantum field theory, because in quantum field theory a charged particle experiences [[Zitterbewegung]] due to interference with virtual particle-antiparticle pairs, thus effectively smearing out the charge over a region comparable to the Compton wavelength. In quantum electrodynamics at small coupling the electromagnetic mass only diverges as the logarithm of the radius of the particle. | |||

== Divergences in quantum electrodynamics ==

{{Unreferenced section|date=December 2009}}

[[Image:Loop-diagram.png|thumbnail|250px|Figure 2. A diagram contributing to electron-electron scattering in QED. The loop has an ultraviolet divergence.]] | |||

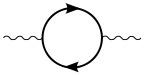

[[Image:vacuum polarization.svg|frame|Vacuum polarization, a.k.a. charge screening. This loop has a logarithmic ultraviolet divergence.]] | |||

[[Image:selfE.svg|thumbnail|144px|Self energy diagram in QED.]] | |||

When developing [[quantum electrodynamics]] in the 1930s, [[Max Born]], [[Werner Heisenberg]], [[Pascual Jordan]], and [[Paul Dirac]] discovered that in perturbative calculations many integrals were divergent. | |||

One way of describing the divergences was discovered in the 1930s by [[Ernst Stueckelberg]], in the 1940s by [[Julian Schwinger]], [[Richard Feynman]], and [[Shin'ichiro Tomonaga]], and systematized by [[Freeman Dyson]]. The divergences appear in calculations involving [[Feynman diagram]]s with closed ''loops'' of [[virtual particle]]s in them. | |||

While virtual particles obey [[conservation of energy]] and [[momentum]], they can have any energy and momentum, even one that is not allowed by the relativistic energy-momentum relation for the observed mass of that particle. (That is, <math>E^2-p^2</math> is not necessarily equal to the squared mass of the particle in that process; for a photon, for example, it could be nonzero.) Such a particle is called [[on shell|off-shell]]. When there is a loop, the momentum of the particles involved in the loop is not uniquely determined by the energies and momenta of incoming and outgoing particles. A variation in the energy of one particle in the loop must be balanced by an equal and opposite variation in the energy of another particle in the loop. So to find the amplitude for the loop process one must [[integral|integrate]] over ''all'' possible combinations of energy and momentum that could travel around the loop.

These integrals are often ''divergent'', that is, they give infinite answers. The significant divergences are the "[[ultraviolet divergence|ultraviolet]]" (UV) ones. An ultraviolet divergence can be described as one that comes from

* the region in the integral where all particles in the loop have large energies and momenta;

* very short-[[wavelength]], high-[[frequency]] fluctuations of the fields, in the [[Path integral formulation|path integral]] for the field;

* very short proper time between particle emission and absorption, if the loop is thought of as a sum over particle paths.

So these divergences are short-distance, short-time phenomena. | |||

There are exactly three one-loop divergent loop diagrams in quantum electrodynamics:<ref>See ch. 10 of "An Introduction To Quantum Field Theory", Michael E. Peskin And Daniel V. Schroeder, Sarat Book House, 2005</ref> | |||

# A photon creates a virtual electron-[[positron]] pair, which then annihilates; this is a ''vacuum polarization'' diagram.

# An electron quickly emits and reabsorbs a virtual photon; this is called a ''self-energy''.

# An electron emits a photon, emits a second photon, and reabsorbs the first. This process is shown in Figure 2, and it is called a ''vertex renormalization''. The Feynman diagram for this is also called a [[penguin diagram]] due to its shape remotely resembling a penguin (with the initial and final state electrons as the arms and legs, the second photon as the body and the first looping photon as the head).

The three divergences correspond to the three parameters in the theory: | |||

# the field normalization Z. | |||

# the mass of the electron. | |||

# the charge of the electron. | |||

A second class of divergence, called an [[infrared divergence]], is due to massless particles, like the photon. Every process involving charged particles emits infinitely many coherent photons of infinite wavelength, and the amplitude for emitting any finite number of photons is zero. For photons, these divergences are well understood. For example, at the 1-loop order, the vertex function has both ultraviolet and ''infrared'' divergences. In contrast to the ultraviolet divergence, the infrared divergence does not require the renormalization of a parameter in the theory. The infrared divergence of the vertex diagram is removed by including a diagram similar to the vertex diagram with the following important difference: the photon connecting the two legs of the electron is cut and replaced by two [[on shell]] (i.e. real) photons whose wavelengths tend to infinity; this diagram is equivalent to the [[bremsstrahlung]] process. This additional diagram must be included because there is no physical way to distinguish a zero-energy photon flowing through a loop, as in the vertex diagram, from zero-energy photons emitted through bremsstrahlung. From a mathematical point of view, the IR divergences can be regularized by fractional differentiation with respect to a parameter. For example, <math> (p^{2}-a^{2})^{1/2} </math> is well defined at ''p'' = ''a'' but is UV divergent; taking its 3/2-th [[fractional derivative]] with respect to <math> -a^{2} </math> gives the IR-divergent <math> 1/(p^{2}-a^{2})</math>, so IR divergences can be cured by turning them into UV divergences.{{clarify|date=May 2012}}

=== A loop divergence ===

The diagram in Figure 2 shows one of the several one-loop contributions to electron-electron scattering in QED. The electron on the left side of the diagram, represented by the solid line, starts out with four-momentum <math>p^\mu</math> and ends up with four-momentum <math>r^\mu</math>. It emits a virtual photon carrying <math>r^\mu - p^\mu</math> to transfer energy and momentum to the other electron. But in this diagram, before that happens, it emits another virtual photon carrying four-momentum <math>q^\mu</math>, and it reabsorbs this one after emitting the other virtual photon. Energy and momentum conservation do not determine the four-momentum <math>q^\mu</math> uniquely, so all possibilities contribute equally and we must integrate. | |||

This diagram's amplitude ends up with, among other things, a factor from the loop of | |||

:<math>-ie^3 \int {d^4 q \over (2\pi)^4} \gamma^\mu {i (\gamma^\alpha (r-q)_\alpha + m) \over (r-q)^2 - m^2 + i \epsilon} \gamma^\rho {i (\gamma^\beta (p-q)_\beta + m) \over (p-q)^2 - m^2 + i \epsilon} \gamma^\nu {-i g_{\mu\nu} \over q^2 + i\epsilon }</math>

The various <math>\gamma^\mu</math> factors in this expression are [[gamma matrices]] as in the covariant formulation of the [[Dirac equation]]; they have to do with the spin of the electron. The factors of <math> e </math> are the electric coupling constant, while the <math> i\epsilon </math> provide a heuristic definition of the contour of integration around the poles in the space of momenta. The important part for our purposes is the dependency on <math>q^\mu</math> of the three big factors in the integrand, which are from the [[propagator]]s of the two electron lines and the photon line in the loop. | |||

This has a piece with two powers of <math>q^\mu</math> on top that dominates at large values of <math>q^\mu</math> (Pokorski 1987, p. 122): | |||

:<math>e^3 \gamma^\mu \gamma^\alpha \gamma^\rho \gamma^\beta \gamma_\mu \int {d^4 q \over (2\pi)^4}{q_\alpha q_\beta \over (r-q)^2 (p-q)^2 q^2}</math>

This integral diverges unless it is cut off at finite energy and momentum in some way.
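A rough power-counting estimate, offered as a sketch rather than a derivation, indicates how strong the divergence is. For <math>|q|</math> much larger than <math>|p|</math>, <math>|r|</math> and <math>m</math>, the integrand of the dominant piece behaves as <math>q_\alpha q_\beta / q^6</math>. Rotating to Euclidean momenta and imposing a cutoff <math>\Lambda</math> (the Wick rotation, the cutoff <math>\Lambda</math>, and the lower scale <math>q_0</math> are assumptions introduced here for illustration), and using <math>d^4 q \propto q^3\,dq</math>, one finds

:<math>\int^{\Lambda} \frac{d^4 q}{(2\pi)^4}\, \frac{q_\alpha q_\beta}{q^6} \;\propto\; \delta_{\alpha\beta} \int_{q_0}^{\Lambda} \frac{q^3 \, dq}{q^4} = \delta_{\alpha\beta} \ln \frac{\Lambda}{q_0},</math>

which grows without bound as <math>\Lambda \to \infty</math>. In this estimate the divergence is logarithmic.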

Similar loop divergences occur in other quantum field theories. | |||

== Renormalized and bare quantities ==

{{Unreferenced section|date=December 2009}}

The solution was to realize that the quantities initially appearing in the theory's formulae (such as the formula for the [[Lagrangian]]), representing such things as the electron's [[electric charge]] and [[mass]], as well as the normalizations of the quantum fields themselves, did ''not'' actually correspond to the physical constants measured in the laboratory. As written, they were ''bare'' quantities that did not take into account the contribution of virtual-particle loop effects to ''the physical constants themselves''. Among other things, these effects would include the quantum counterpart of the electromagnetic back-reaction that so vexed classical theorists of electromagnetism. In general, these effects would be just as divergent as the amplitudes under study in the first place; so finite measured quantities would in general imply divergent bare quantities. | |||

In order to make contact with reality, then, the formulae would have to be rewritten in terms of measurable, ''renormalized'' quantities. The charge of the electron, say, would be defined in terms of a quantity measured at a specific [[kinematics|kinematic]] ''renormalization point'' or ''subtraction point'' (which will generally have a characteristic energy, called the ''renormalization scale'' or simply the [[energy scale]]). The parts of the Lagrangian left over, involving the remaining portions of the bare quantities, could then be reinterpreted as ''counterterms'', involved in divergent diagrams exactly ''canceling out'' the troublesome divergences for other diagrams. | |||

===Renormalization in QED===

[[Image:Counterterm.png|thumbnail|250px|Figure 3. The vertex corresponding to the ''Z''<sub>1</sub> counterterm cancels the divergence in Figure 2.]] | |||

For example, in the [[quantum electrodynamics|Lagrangian of QED]] | |||

:<math>\mathcal{L}=\bar\psi_B\left[i\gamma_\mu (\partial^\mu + ie_BA_B^\mu)-m_B\right]\psi_B -\frac{1}{4}F_{B\mu\nu}F_B^{\mu\nu}</math> | |||

the fields and coupling constant are really ''bare'' quantities, hence the subscript <math>B</math> above. Conventionally the bare quantities are written so that the corresponding Lagrangian terms are multiples of the renormalized ones: | |||

:<math>\left(\bar\psi m \psi\right)_B = Z_0 \, \bar\psi m \psi</math> | |||

:<math>\left(\bar\psi (\partial^\mu + ieA^\mu)\psi\right)_B = Z_1 \, \bar\psi(\partial^\mu + ieA^\mu)\psi</math> | |||

:<math>\left(F_{\mu\nu}F^{\mu\nu}\right)_B = Z_3\, F_{\mu\nu}F^{\mu\nu}.</math> | |||

([[Gauge invariance]], via a [[Ward–Takahashi identity]], turns out to imply that we can renormalize the two terms of the [[covariant derivative]] piece <math>\bar \psi (\partial + ieA) \psi</math> together (Pokorski 1987, p. 115), which is what happened to <math>Z_2</math>; it is the same as <math>Z_1</math>.) | |||

A term in this Lagrangian, for example, the electron-photon interaction pictured in Figure 1, can then be written | |||

:<math>\mathcal{L}_I = -e \bar\psi \gamma_\mu A^\mu \psi \, - \, (Z_1 - 1) e \bar\psi \gamma_\mu A^\mu \psi</math> | |||

The physical constant <math>e</math>, the electron's charge, can then be defined in terms of some specific experiment; we set the renormalization scale equal to the energy characteristic of this experiment, and the first term gives the interaction we see in the laboratory (up to small, finite corrections from loop diagrams, providing such exotica as the high-order corrections to the [[magnetic moment]]). The rest is the counterterm. If the theory is ''renormalizable'' (see below for more on this), as it is in QED, the ''divergent'' parts of loop diagrams can all be decomposed into pieces with three or fewer legs, with an algebraic form that can be canceled out by the second term (or by the similar counterterms that come from <math>Z_0</math> and <math>Z_3</math>). | |||

The diagram with the <math>Z_1</math> counterterm's interaction vertex placed as in Figure 3 cancels out the divergence from the loop in Figure 2. | |||

The splitting of the "bare terms" into the original terms and counterterms came before the [[renormalization group]] insights<ref>K.G. Wilson (1975), "The renormalization group: critical phenomena and the Kondo problem", ''Rev. Mod. Phys.'' '''47''', 4, 773.</ref> due to [[Kenneth G. Wilson|Kenneth Wilson]]. According to such [[renormalization group]] insights, this splitting is unnatural and actually unphysical. | |||

===Running couplings===

To minimize the contribution of loop diagrams to a given calculation (and therefore make it easier to extract results), one chooses a renormalization point close to the energies and momenta actually exchanged in the interaction. However, the renormalization point is not itself a physical quantity: the physical predictions of the theory, calculated to all orders, should in principle be ''independent'' of the choice of renormalization point, as long as it is within the domain of application of the theory. Changes in renormalization scale will simply affect how much of a result comes from Feynman diagrams without loops, and how much comes from the leftover finite parts of loop diagrams. One can exploit this fact to calculate the effective variation of [[Coupling constant|physical constants]] with changes in scale. This variation is encoded by [[beta-function]]s, and the general theory of this kind of scale-dependence is known as the [[renormalization group]]. | |||

Colloquially, particle physicists often speak of certain physical "constants" as varying with the energy of an interaction, though in fact it is the renormalization scale that is the independent quantity. This [[Coupling constant#Running coupling|''running'']] does, however, provide a convenient means of describing changes in the behavior of a field theory under changes in the energies involved in an interaction. For example, since the coupling in [[quantum chromodynamics]] becomes small at large energy scales, the theory behaves more like a free theory as the energy exchanged in an interaction becomes large, a phenomenon known as [[asymptotic freedom]]. Choosing an increasing energy scale and using the renormalization group makes this clear from simple Feynman diagrams; were this not done, the prediction would be the same, but would arise from complicated high-order cancellations. | |||
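The decrease of the QCD coupling with energy can be illustrated with the standard one-loop running formula. The sketch below is an illustration under stated assumptions, not a precision calculation: the reference value <code>ALPHA_S_MZ</code>, the reference scale <code>M_Z</code>, and a fixed number of active quark flavors <code>N_FLAVORS</code> are inputs chosen here, and higher-loop terms and flavor thresholds are ignored.

```python
import math

# Illustrative inputs (assumptions, not taken from the article)
ALPHA_S_MZ = 0.118   # strong coupling at the reference scale
M_Z = 91.1876        # reference scale in GeV (Z boson mass)
N_FLAVORS = 5        # number of active quark flavors, held fixed


def alpha_s_one_loop(q, alpha_ref=ALPHA_S_MZ, mu=M_Z, n_f=N_FLAVORS):
    """One-loop running coupling alpha_s(q), with q and mu in GeV."""
    # b0 > 0 (for n_f <= 16) is what makes the coupling shrink at large q.
    b0 = (33 - 2 * n_f) / (12 * math.pi)
    return alpha_ref / (1 + b0 * alpha_ref * math.log(q**2 / mu**2))


if __name__ == "__main__":
    for q in (10.0, M_Z, 1.0e3, 1.0e4):
        print(f"Q = {q:8.1f} GeV   alpha_s = {alpha_s_one_loop(q):.4f}")
```

The printed values fall as the scale rises, which is the one-loop signature of asymptotic freedom described above.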

For example, <math>I=\int_0^a \frac{1}{z}\,dz-\int_0^b \frac{1}{z}\,dz=\ln a-\ln b-\ln 0 +\ln 0</math> is ill defined.

To eliminate the divergence, replace the lower limits of the two integrals with <math>\epsilon_a \,</math> and <math>\epsilon_b \,</math>, respectively: <math>I=\ln{\dfrac{a}{b}}-\ln{\epsilon_a}+\ln{\epsilon_b}</math>.

Provided that <math>\epsilon_b/\epsilon_a \to 1</math> as both tend to zero, <math>I=\ln \dfrac{a}{b}</math>.
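This toy example can be checked numerically. The Python sketch below (function names are hypothetical) evaluates the two regulated integrals in closed form and shows that their difference is finite, but equals <math>\ln(a/b)</math> only when the two lower cutoffs are removed at the same rate.

```python
import math


def regulated_log_integral(upper, eps):
    """Integral of dz/z from eps to upper, in closed form: ln(upper/eps)."""
    return math.log(upper / eps)


def regulated_difference(a, b, eps_a, eps_b):
    """I = int_{eps_a}^{a} dz/z - int_{eps_b}^{b} dz/z."""
    return regulated_log_integral(a, eps_a) - regulated_log_integral(b, eps_b)


if __name__ == "__main__":
    a, b = 8.0, 2.0
    for eps in (1e-3, 1e-6, 1e-9):
        same = regulated_difference(a, b, eps, eps)           # eps_b/eps_a = 1
        skewed = regulated_difference(a, b, eps, 3.0 * eps)   # eps_b/eps_a = 3
        print(f"eps = {eps:.0e}   ratio 1: {same:.6f}   ratio 3: {skewed:.6f}")
    print(f"ln(a/b) = {math.log(a / b):.6f}")
```

Each integral alone grows without bound as the cutoff shrinks, while the difference stays fixed; with a cutoff ratio of 3 it settles at <math>\ln(3a/b)</math> instead, which is why the prescription for removing the regulators has to be specified.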

== Regularization ==

{{Unreferenced section|date=December 2009}}

Since the quantity <math>\infty - \infty</math> is ill-defined, in order to make this notion of canceling divergences precise, the divergences first have to be tamed mathematically using the [[limit (mathematics)|theory of limits]], in a process known as [[regularization (physics)|regularization]]. | |||

An essentially arbitrary modification to the loop integrands, or ''regulator'', can make them drop off faster at high energies and momenta, in such a manner that the integrals converge. A regulator has a characteristic energy scale known as the [[cutoff]]; taking this cutoff to infinity (or, equivalently, the corresponding length/time scale to zero) recovers the original integrals. | |||

With the regulator in place, and a finite value for the cutoff, divergent terms in the integrals then turn into finite but cutoff-dependent terms. After canceling out these terms with the contributions from cutoff-dependent counterterms, the cutoff is taken to infinity and finite physical results are recovered. If physics on scales we can measure is independent of what happens at the very shortest distance and time scales, then it should be possible to get cutoff-independent results for calculations.

Many different types of regulator are used in quantum field theory calculations, each with its advantages and disadvantages. One of the most popular in modern use is ''[[dimensional regularization]]'', invented by [[Gerardus 't Hooft]] and [[Martinus J. G. Veltman]],<ref>{{cite doi|10.1016/0550-3213(72)90279-9|noedit}}</ref> which tames the integrals by carrying them into a space with a fictitious fractional number of dimensions. Another is ''[[Pauli–Villars regularization]]'', which adds fictitious particles to the theory with very large masses, such that loop integrands involving the massive particles cancel out the existing loops at large momenta. | |||
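The Pauli–Villars idea can be illustrated on a toy integral. The sketch below does not use the QED loop itself: it assumes a simplified Euclidean radial integrand <math>q^3/(q^2+m^2)^2</math>, which diverges logarithmically, and subtracts the same integrand with a heavy regulator mass <math>M</math>. The function names and the choice of integrand are illustrative assumptions.

```python
import math


def cutoff_integral(cutoff, mass):
    """Closed form of int_0^cutoff q^3 dq / (q^2 + mass^2)^2.

    Grows like ln(cutoff) when the cutoff is large.
    """
    s = cutoff**2 + mass**2
    return 0.5 * (math.log(s / mass**2) + mass**2 / s - 1.0)


def pauli_villars_integral(cutoff, mass, regulator_mass):
    """Same integral with the heavy-regulator contribution subtracted."""
    return cutoff_integral(cutoff, mass) - cutoff_integral(cutoff, regulator_mass)


if __name__ == "__main__":
    m, big_m = 1.0, 100.0
    for cutoff in (1e3, 1e4, 1e5, 1e6):
        raw = cutoff_integral(cutoff, m)
        reg = pauli_villars_integral(cutoff, m, big_m)
        print(f"cutoff = {cutoff:.0e}   unregulated: {raw:8.4f}   regulated: {reg:.6f}")
    print(f"ln(M/m) = {math.log(big_m / m):.6f}")
```

The unregulated column keeps growing with the cutoff, while the regulated column converges to <math>\ln(M/m)</math>: the divergence has been traded for a finite dependence on the regulator mass.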

Yet another regularization scheme is ''[[Lattice field theory|lattice regularization]]'', introduced by [[Kenneth G. Wilson|Kenneth Wilson]], which treats space-time as a hyper-cubical lattice with a fixed grid size. This size provides a natural cutoff for the maximal momentum that a particle can possess when propagating on the lattice. After calculations are done on several lattices with different grid sizes, the physical result is [[extrapolate]]d to grid size 0, that is, to our natural universe. This presupposes the existence of a [[scaling limit]].
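The two ingredients of the lattice approach, a momentum cutoff set by the grid size and an extrapolation to zero grid size, can be sketched on a one-dimensional toy quantity. The example below assumes the nearest-neighbour discretization of the second derivative, for which the continuum <math>k^2</math> is replaced by <math>(2/a)^2 \sin^2(ka/2)</math> on a lattice of spacing <math>a</math>; the function names and the two-point extrapolation are illustrative choices, not a description of any particular lattice calculation.

```python
import math


def max_lattice_momentum(a):
    """Largest momentum component representable on a lattice of spacing a."""
    return math.pi / a


def lattice_p_squared(k, a):
    """Lattice counterpart of k^2 from the nearest-neighbour second difference."""
    return (2.0 / a * math.sin(0.5 * k * a)) ** 2


def extrapolate_to_zero_spacing(k, a):
    """Richardson extrapolation in a^2 using lattice spacings a and a/2."""
    coarse = lattice_p_squared(k, a)
    fine = lattice_p_squared(k, a / 2)
    # The leading lattice artifact is proportional to a^2, so this cancels it.
    return (4.0 * fine - coarse) / 3.0


if __name__ == "__main__":
    k, a = 1.0, 0.5
    print(f"momentum cutoff pi/a      = {max_lattice_momentum(a):.4f}")
    print(f"lattice value, spacing a  = {lattice_p_squared(k, a):.6f}")
    print(f"lattice value, spacing a/2 = {lattice_p_squared(k, a / 2):.6f}")
    print(f"extrapolated to a -> 0    = {extrapolate_to_zero_spacing(k, a):.6f}")
    print(f"continuum k^2             = {k**2:.6f}")
```

The extrapolated value lies closer to the continuum result than either finite-spacing value, which is the toy analogue of extrapolating lattice results to grid size 0.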

A rigorous mathematical approach to renormalization theory is the so-called [[causal perturbation theory]], where ultraviolet divergences are avoided from the start in calculations by performing well-defined mathematical operations only within the framework of [[Distribution (mathematics)|distribution]] theory. The disadvantage of the method is the fact that the approach is quite technical and requires a high level of mathematical knowledge. | |||

=== Zeta function regularization ===

[[Julian Schwinger]] discovered a relationship{{citation needed|date=June 2012}} between [[zeta function regularization]] and renormalization, using the asymptotic relation: | |||

:<math> I(n, \Lambda )= \int_0^{\Lambda }dp\,p^n \sim 1+2^n+3^n+\cdots+ \Lambda^n \rightarrow \zeta(-n)</math> | |||

as the regulator <math>\Lambda \rightarrow \infty</math>. Based on this, he considered using the values of <math>\zeta(-n)</math> to get finite results. Although he reached inconsistent results, an improved formula, studied by [[Hartle]] and J. Garcia and based on the work of E. Elizalde, incorporates the [[zeta regularization]] algorithm:

:<math> I(n, \Lambda) = \frac{n}{2}I(n-1, \Lambda) + \zeta(-n) - \sum_{r=1}^{\infty}\frac{B_{2r}}{(2r)!} a_{n,r}(n-2r+1) I(n-2r, \Lambda),</math> | |||

where the ''B'''s are the [[Bernoulli number]]s and | |||

:<math>a_{n,r}= \frac{\Gamma(n+1)}{\Gamma(n-2r+2)}.</math> | |||

So every <math> I(m,\Lambda ) </math> can be written as a linear combination of <math> \zeta(-1),\zeta(-3),\zeta(-5),\ldots, \zeta(-m) </math>.
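The zeta values entering such combinations are rational numbers that follow from the Bernoulli numbers through the standard identity <math>\zeta(-n) = -B_{n+1}/(n+1)</math> for positive integers <math>n</math>. A minimal Python sketch with exact arithmetic (function names are hypothetical, and the convention <math>B_1 = -1/2</math> is assumed):

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n_max):
    """Return [B_0, ..., B_n_max] as exact fractions (convention B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        # Recurrence: sum_{k=0}^{m} C(m+1, k) B_k = 0 for m >= 1.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-acc / (m + 1))
    return b


def zeta_at_negative_integer(n):
    """zeta(-n) = -B_{n+1} / (n + 1) for integer n >= 1."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return -bernoulli_numbers(n + 1)[n + 1] / (n + 1)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(f"zeta(-{n}) = {zeta_at_negative_integer(n)}")
```

The values at negative even integers vanish, which is consistent with only odd arguments appearing in the list above.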

Alternatively, using the [[Abel–Plana formula]], we have for every divergent integral

<math> \zeta(-m, \beta )-\frac{\beta ^{m}}{2}-i\int_ 0 ^{\infty}dt \frac{ (it+\beta)^{m}-(-it+\beta)^{m}}{e^{2 \pi t}-1}=\int_0^\infty dp \, (p+\beta)^m </math>

valid when ''m'' > 0. Here the zeta function is the [[Hurwitz zeta function]] and <math>\beta</math> is a positive real number.

The "geometric" analogy arises if the [[rectangle method]] is used to evaluate the integral:

<math> \int_0^\infty dx \, (\beta +x)^m \approx \sum_{n=0}^\infty h^{m+1} \zeta( \beta h^{-1} , -m) </math>

This uses Hurwitz zeta regularization together with the rectangle method with step ''h'' (not to be confused with [[Planck's constant]]).

The logarithmically divergent integral has the regularization <math> \sum_{n=0}^{\infty} \frac{1}{n+a}= - \psi (a) </math>, where <math>\psi</math> is the [[digamma function]].

For [[multi-loop integrals]] that depend on several variables <math> k_1 , k_2 , \ldots, k_n </math>, we can change variables to polar coordinates and replace the integral over the angles <math> \int d \Omega </math> by a sum, leaving a single divergent integral that depends on the modulus <math> r^2 = k_1^2 + k_2^2 +\cdots+k_n^2 </math>, to which the zeta regularization algorithm can be applied. The main idea for multi-loop integrals is to rewrite the factor <math> F(q_1 , q_2 ,\ldots,q_n) </math>, after a change to hyperspherical coordinates, as <math> F(r,\Omega ) </math>, so that the overlapping UV divergences are encoded in the variable ''r''. Regularizing these integrals requires a regulator; for multi-loop integrals it can be taken as <math> (1+ \sqrt{q_{i}q^{i}} )^{-s} </math>, so that the multi-loop integral converges for large enough ''s''. Using zeta regularization, we can then analytically continue the variable ''s'' to the physical limit ''s'' = 0 and so regularize any UV integral, replacing a divergent integral by a linear combination of divergent series, which can be regularized in terms of the values of the Riemann zeta function at negative integers, <math> \zeta (-m) </math>.

== Attitudes and interpretation ==

The early formulators of QED and other quantum field theories were, as a rule, dissatisfied with this state of affairs. It seemed illegitimate to do something tantamount to subtracting infinities from infinities to get finite answers. | |||

[[Freeman Dyson]] has shown that these infinities are of a basic nature and cannot be eliminated by any formal mathematical procedures, such as the renormalization method.<ref>F. J. Dyson, ''Phys. Rev.'' '''85''' (1952) 631.</ref><ref>A. W. Stern, ''Science'' '''116''' (1952) 493.</ref> | |||

[[Paul Dirac|Dirac]]'s criticism was the most persistent.<ref>P.A.M. Dirac, "The Evolution of the Physicist's Picture of Nature," in Scientific American, May 1963, p. 53.</ref> As late as 1975, he was saying:<ref>Kragh, Helge ; ''Dirac: A scientific biography'', CUP 1990, p. 184</ref> | |||

:Most physicists are very satisfied with the situation. They say: 'Quantum electrodynamics is a good theory and we do not have to worry about it any more.' I must say that I am very dissatisfied with the situation, because this so-called 'good theory' does involve neglecting infinities which appear in its equations, neglecting them in an arbitrary way. This is just not sensible mathematics. Sensible mathematics involves neglecting a quantity when it is small - not neglecting it just because it is infinitely great and you do not want it! | |||

Another important critic was [[Richard Feynman|Feynman]]. Despite his crucial role in the development of quantum electrodynamics, he wrote the following in 1985:<ref>Feynman, Richard P. ; ''QED, The Strange Theory of Light and Matter'', Penguin 1990, p. 128</ref> | |||

:The shell game that we play ... is technically called 'renormalization'. But no matter how clever the word, it is still what I would call a dippy process! Having to resort to such hocus-pocus has prevented us from proving that the theory of quantum electrodynamics is mathematically self-consistent. It's surprising that the theory still hasn't been proved self-consistent one way or the other by now; I suspect that renormalization is not mathematically legitimate. | |||

While Dirac's criticism was based on the procedure of renormalization itself, Feynman's criticism was very different. Feynman was concerned that all field theories known in the 1960s had the property that the interactions become infinitely strong at short enough distance scales. This property, called a [[Landau pole]], made it plausible that quantum field theories were all inconsistent. In 1973, [[David Gross|Gross]], [[David Politzer|Politzer]] and [[Frank Wilczek|Wilczek]] showed that another quantum field theory, [[quantum chromodynamics]], does not have a Landau pole. Feynman, along with most others, accepted that QCD was a fully consistent theory.{{Citation needed|date=December 2009}}

The general unease was almost universal in texts up to the 1970s and 1980s. Beginning in the 1970s, however, inspired by work on the [[renormalization group]] and [[effective field theory]], and despite the fact that Dirac and various others—all of whom belonged to the older generation—never withdrew their criticisms, attitudes began to change, especially among younger theorists. [[Kenneth G. Wilson]] and others demonstrated that the renormalization group is useful in [[statistical mechanics|statistical]] field theory applied to [[condensed matter physics]], where it provides important insights into the behavior of [[phase transition]]s. In condensed matter physics, a ''real'' short-distance regulator exists: [[matter]] ceases to be continuous on the scale of [[atom]]s. Short-distance divergences in condensed matter physics do not present a philosophical problem, since the field theory is only an effective, smoothed-out representation of the behavior of matter anyway; there are no infinities since the cutoff is actually always finite, and it makes perfect sense that the bare quantities are cutoff-dependent. | |||

If [[Quantum field theory|QFT]] holds all the way down past the [[Planck length]] (where it might yield to [[string theory]], [[causal set theory]] or something different), then there may be no real problem with short-distance divergences in [[particle physics]] either; ''all'' field theories could simply be effective field theories. In a sense, this approach echoes the older attitude that the divergences in QFT speak of human ignorance about the workings of nature, but also acknowledges that this ignorance can be quantified and that the resulting effective theories remain useful. | |||

Be that as it may, [[Abdus Salam|Salam]]'s remark<ref>C.J.Isham, A.Salam and J.Strathdee, `Infinity Suppression Gravity Modified Quantum Electrodynamics,' Phys. Rev. D5, 2548 (1972)</ref> in 1972 still seems relevant:

: Field-theoretic infinities first encountered in Lorentz's computation of electron have persisted in classical electrodynamics for seventy and in quantum electrodynamics for some thirty-five years. These long years of frustration have left in the subject a curious affection for the infinities and a passionate belief that they are an inevitable part of nature; so much so that even the suggestion of a hope that they may after all be circumvented — and finite values for the renormalization constants computed — is considered irrational. Compare [[Bertrand Russell|Russell]]'s postscript to the third volume of his Autobiography, ''The Final Years, 1944-1967'' (George Allen and Unwin, Ltd., London 1969), p. 221: In the modern world, if communities are unhappy, it is often because they have ignorances, habits, beliefs, and passions, which are dearer to them than happiness or even life. I find many men in our dangerous age who seem to be in love with misery and death, and who grow angry when hopes are suggested to them. They think hope is irrational and that, in sitting down to lazy despair, they are merely facing facts.

In QFT, the value of a physical constant, in general, depends on the scale that one chooses as the renormalization point, and it becomes very interesting to examine the renormalization group running of physical constants under changes in the energy scale. The coupling constants in the [[Standard Model]] of particle physics vary in different ways with increasing energy scale: the coupling of [[quantum chromodynamics]] and the weak isospin coupling of the [[electroweak force]] tend to decrease, and the weak hypercharge coupling of the electroweak force tends to increase. At the colossal energy scale of 10<sup>15</sup> [[GeV]] (far beyond the reach of our current [[particle accelerator]]s), they all become approximately the same size (Grotz and Klapdor 1990, p. 254), a major motivation for speculations about [[grand unified theory]]. Instead of being only a worrisome problem, renormalization has become an important theoretical tool for studying the behavior of field theories in different regimes. | |||
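The approximate convergence of the three couplings can be reproduced with one-loop running of the inverse couplings, <math>\alpha_i^{-1}(\mu) = \alpha_i^{-1}(M_Z) - \tfrac{b_i}{2\pi}\ln(\mu/M_Z)</math>. The sketch below rests on several assumptions that are not taken from the article: rounded starting values at the Z mass, the one-loop Standard Model coefficients <math>b_i = (41/10, -19/6, -7)</math> with the hypercharge coupling in the normalization commonly used for grand unification, and no new particles between the two scales.

```python
import math

M_Z = 91.1876  # reference scale in GeV

# Rounded inverse couplings at M_Z (assumed inputs; hypercharge in GUT normalization)
INV_ALPHA_MZ = {"hypercharge": 59.0, "weak isospin": 29.6, "strong": 8.5}

# One-loop Standard Model beta-function coefficients b_i
B_COEFF = {"hypercharge": 41 / 10, "weak isospin": -19 / 6, "strong": -7.0}


def inverse_coupling(name, mu):
    """One-loop value of 1/alpha_i at the scale mu (in GeV)."""
    return INV_ALPHA_MZ[name] - B_COEFF[name] / (2 * math.pi) * math.log(mu / M_Z)


def spread(mu):
    """Difference between the largest and smallest inverse coupling at mu."""
    values = [inverse_coupling(name, mu) for name in INV_ALPHA_MZ]
    return max(values) - min(values)


if __name__ == "__main__":
    for mu in (M_Z, 1.0e15):
        row = ", ".join(f"{n}: {inverse_coupling(n, mu):.1f}" for n in INV_ALPHA_MZ)
        print(f"mu = {mu:.3g} GeV   1/alpha -> {row}   spread = {spread(mu):.1f}")
```

With these inputs the inverse hypercharge coupling falls (the coupling grows) while the other two rise, and the three values end up within a few units of one another at 10<sup>15</sup> GeV, in line with the qualitative statement above; they do not meet exactly.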

If a theory featuring renormalization (e.g. QED) can only be sensibly interpreted as an effective field theory, i.e. as an approximation reflecting human ignorance about the workings of nature, then the problem remains of discovering a more accurate theory that does not have these renormalization problems. As [[Lewis Ryder]] has put it, "In the Quantum Theory, these [classical] divergences do not disappear; on the contrary, they appear to get worse. And despite the comparative success of renormalisation theory the feeling remains that there ought to be a more satisfactory way of doing things."<ref>Ryder, Lewis. ''[http://books.google.com/books?id=L9YhYS7gcXAC&pg=PP1&dq=%22Quantum+Field+Theory%22+and+Ryder&ei=dlFASOTZJpyMjAGcruWIBQ&sig=n13YWlsiuRr81SgqQ0ng9nMDOX8#PPA390,M1 Quantum Field Theory]'', page 390 (Cambridge University Press 1996).</ref>

== Renormalizability ==

From this philosophical reassessment a new concept follows naturally: the notion of renormalizability. Not all theories lend themselves to renormalization in the manner described above, with a finite supply of counterterms and all quantities becoming cutoff-independent at the end of the calculation. If the Lagrangian contains combinations of field operators of high enough [[dimensional analysis|dimension]] in energy units, the counterterms required to cancel all divergences proliferate to infinite number, and, at first glance, the theory would seem to gain an infinite number of free parameters and therefore lose all predictive power, becoming scientifically worthless. Such theories are called ''nonrenormalizable''.

The [[Standard Model]] of particle physics contains only renormalizable operators, but the interactions of [[general relativity]] become nonrenormalizable operators if one attempts to construct a field theory of [[quantum gravity]] in the most straightforward manner{{how|date=June 2012}}, suggesting that [[perturbation theory (quantum mechanics)|perturbation theory]] is useless in application to quantum gravity.

However, in an [[effective field theory]], "renormalizability" is, strictly speaking, a [[misnomer]]. In a nonrenormalizable effective field theory, the terms in the Lagrangian do proliferate without limit, but their coefficients are suppressed by ever higher inverse powers of the energy cutoff. If the cutoff is a real, physical quantity (that is, if the theory is only an effective description of physics up to some maximum energy or minimum distance scale), then these extra terms could represent real physical interactions. Assuming that the dimensionless constants in the theory do not get too large, one can group calculations by inverse powers of the cutoff and extract approximate predictions, to finite order in the cutoff, that still have a finite number of free parameters. It can even be useful to renormalize these "nonrenormalizable" interactions.
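The grouping by inverse powers of the cutoff can be written schematically as follows. This is a sketch in four spacetime dimensions; the symbols <math>\Lambda</math> (cutoff), <math>\mathcal{O}_i^{(d)}</math> (operators of mass dimension <math>d</math>) and <math>c_i^{(d)}</math> (dimensionless coefficients) are notation introduced here for illustration.

<math>\mathcal{L}_\mathrm{eff} = \mathcal{L}_{d \le 4} + \sum_{d > 4} \sum_i \frac{c_i^{(d)}}{\Lambda^{d-4}} \, \mathcal{O}_i^{(d)}</math>

At a typical energy <math>E \ll \Lambda</math>, an operator of dimension <math>d</math> contributes to amplitudes at relative order <math>(E/\Lambda)^{d-4}</math>. Truncating the sum at a fixed <math>d</math> therefore keeps only finitely many coefficients while controlling the error as a power of <math>E/\Lambda</math>.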

Nonrenormalizable interactions in effective field theories rapidly become weaker as the energy scale becomes much smaller than the cutoff. The classic example is the [[Fermi's interaction|Fermi theory]] of the [[weak nuclear force]], a nonrenormalizable effective theory whose cutoff is comparable to the mass of the [[W particle]]. This fact may also provide a possible explanation for ''why'' almost all of the particle interactions we see are describable by renormalizable theories. It may be that any others that may exist at the GUT or Planck scale simply become too weak to detect in the realm we can observe, with one exception: [[gravity]], whose exceedingly weak interaction is magnified by the presence of the enormous masses of [[star]]s and [[planet]]s.{{Citation needed|date=February 2010}}
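As a rough numerical illustration of the Fermi-theory example, the sketch below uses the standard tree-level matching relation <math>G_F/\sqrt{2} = g^2/(8 M_W^2)</math> between the Fermi constant and the weak coupling. The rounded input values and the function names are assumptions chosen for illustration.

```python
import math

# Rounded illustrative inputs (assumptions, not taken from the article).
G_FERMI = 1.1664e-5   # Fermi constant in GeV^-2
M_W_GEV = 80.4        # W boson mass in GeV


def weak_coupling_from_fermi(g_fermi: float = G_FERMI, m_w: float = M_W_GEV) -> float:
    """Invert the tree-level matching G_F / sqrt(2) = g^2 / (8 M_W^2) for g."""
    return math.sqrt(8.0 * m_w**2 * g_fermi / math.sqrt(2.0))


def dimensionless_strength(energy_gev: float, g_fermi: float = G_FERMI) -> float:
    """Effective strength G_F * E^2 of the four-fermion interaction at energy E."""
    return g_fermi * energy_gev**2


if __name__ == "__main__":
    print(f"g from matching: {weak_coupling_from_fermi():.3f}")
    for energy in (0.001, 1.0, M_W_GEV):
        print(f"E = {energy:8.3f} GeV -> G_F * E^2 = {dimensionless_strength(energy):.3e}")
```

The quadratic growth of <math>G_F E^2</math> shows why the interaction looks feeble at nuclear energies and becomes comparable to the other forces only as the energy approaches the W mass, where the effective description stops being reliable.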

==Renormalization schemes==

In actual calculations, the counterterms introduced to cancel the divergences in Feynman diagram calculations beyond tree level must be ''fixed'' using a set of ''renormalization conditions''. The common renormalization schemes in use include:

* [[Minimal subtraction scheme|Minimal subtraction (MS) scheme]] and the related modified minimal subtraction (MS-bar) scheme

* [[On shell renormalization scheme|On-shell scheme]]

==Application in statistical physics==

As mentioned in the introduction, the methods of renormalization have been applied to [[Statistical Physics|statistical physics]], namely to the problem of critical behaviour near second-order phase transitions, in particular at fictitious spatial dimensions just below 4, where the above-mentioned methods could even be sharpened (i.e., instead of "renormalizability" one gets "super-renormalizability"). This allowed extrapolation to the real spatial dimensionality for phase transitions, 3. Details can be found in the book by Zinn-Justin listed under Further reading.

For the discovery of these unexpected applications, and for working out the details, the 1982 Nobel Prize in Physics was awarded to [[Kenneth G. Wilson]].

== See also ==

{{Div col}}

* [[Effective field theory]]

* [[Landau pole]]

* [[Quantum field theory]]

* [[Quantum triviality]]

* [[Regularization (physics)|Regularization]]

* [[Renormalization group]]

* [[Ward–Takahashi identity]]

* [[Zeta function regularization]]

* [[Zeno's paradoxes]]

{{Div col end}}

== Further reading ==

=== General introduction ===

* Delamotte, Bertrand ; {{doi-inline|10.1119/1.1624112|''A hint of renormalization''}}, American Journal of Physics 72 (2004) pp. 170–184. Beautiful elementary introduction to the ideas, no prior knowledge of field theory being necessary. Full text available at: [http://arxiv.org/abs/hep-th/0212049 ''hep-th/0212049'']

* Baez, John ; [http://math.ucr.edu/home/baez/renormalization.html ''Renormalization Made Easy''], (2005). A qualitative introduction to the subject.

* Blechman, Andrew E. ; [http://www.pha.jhu.edu/~blechman/papers/renormalization/ ''Renormalization: Our Greatly Misunderstood Friend''], (2002). Summary of a lecture; has more information about specific regularization and divergence-subtraction schemes.

* Cao, Tian Yu & Schweber, Silvan S. ; {{doi-inline|10.1007/BF01255832|''The Conceptual Foundations and the Philosophical Aspects of Renormalization Theory''}}, Synthese, 97(1) (1993), 33–108.

* [[Dmitry Shirkov|Shirkov, Dmitry]] ; ''Fifty Years of the Renormalization Group'', CERN Courier 41(7) (2001). Full text available at: [http://www.cerncourier.com/main/article/41/7/14 ''I.O.P Magazines''].

* Elizalde, E. ; ''Zeta regularization techniques with Applications''.

=== Mainly: quantum field theory ===

* [[Nikolay Bogoliubov|N. N. Bogoliubov]], [[Dmitry Shirkov|D. V. Shirkov]] (1959): ''The Theory of Quantized Fields''. New York, Interscience. The first textbook on the [[renormalization group]] theory.

* Ryder, Lewis H. ; ''Quantum Field Theory'' (Cambridge University Press, 1985), ISBN 0-521-33859-X. Highly readable textbook, certainly the best introduction to relativistic Q.F.T. for particle physics.

* Zee, Anthony ; ''Quantum Field Theory in a Nutshell'', Princeton University Press (2003) ISBN 0-691-01019-6. Another excellent textbook on Q.F.T.

* Weinberg, Steven ; ''The Quantum Theory of Fields'' (3 volumes) Cambridge University Press (1995). A monumental treatise on Q.F.T. written by a leading expert, [http://nobelprize.org/physics/laureates/1979/weinberg-lecture.html ''Nobel laureate 1979''].

* Pokorski, Stefan ; ''Gauge Field Theories'', Cambridge University Press (1987) ISBN 0-521-47816-2.

* 't Hooft, Gerard ; ''The Glorious Days of Physics – Renormalization of Gauge theories'', lecture given at Erice (August/September 1998) by the [http://nobelprize.org/physics/laureates/1999/thooft-autobio.html ''Nobel laureate 1999'']. Full text available at: [http://fr.arxiv.org/abs/hep-th/9812203 ''hep-th/9812203''].

* Rivasseau, Vincent ; ''An introduction to renormalization'', Poincaré Seminar (Paris, Oct. 12, 2002), published in: Duplantier, Bertrand; Rivasseau, Vincent (Eds.) ; ''Poincaré Seminar 2002'', Progress in Mathematical Physics 30, Birkhäuser (2003) ISBN 3-7643-0579-7. Full text available in [http://www.bourbaphy.fr/Rivasseau.ps ''PostScript''].

* Rivasseau, Vincent ; ''From perturbative to constructive renormalization'', Princeton University Press (1991) ISBN 0-691-08530-7. Full text available in [http://cpth.polytechnique.fr/cpth/rivass/articles/book.ps ''PostScript''].

* Iagolnitzer, Daniel & Magnen, J. ; ''Renormalization group analysis'', Encyclopaedia of Mathematics, Kluwer Academic Publisher (1996). Full text available in PostScript and pdf [http://www-spht.cea.fr/articles/t96/037/ ''here''].

* Scharf, Günter ; ''Finite quantum electrodynamics: The causal approach'', Springer Verlag Berlin Heidelberg New York (1995) ISBN 3-540-60142-2.

* A. S. Švarc ([[Albert Schwarz]]), Математические основы квантовой теории поля (''Mathematical aspects of quantum field theory''), Atomizdat, Moscow, 1975. 368 pp.

=== Mainly: statistical physics ===

* A. N. Vasil'ev ; ''The Field Theoretic Renormalization Group in Critical Behavior Theory and Stochastic Dynamics'' (Routledge Chapman & Hall 2004) ISBN 978-0-415-31002-4.

* Nigel Goldenfeld ; ''Lectures on Phase Transitions and the Renormalization Group'', Frontiers in Physics 85, Westview Press (June, 1992) ISBN 0-201-55409-7. Covering the elementary aspects of the physics of phase transitions and the renormalization group, this popular book emphasizes understanding and clarity rather than technical manipulations.

* Zinn-Justin, Jean ; ''Quantum Field Theory and Critical Phenomena'', Oxford University Press (4th edition – 2002) ISBN 0-19-850923-5. A masterpiece on applications of renormalization methods to the calculation of critical exponents in statistical mechanics, following Wilson's ideas (Kenneth Wilson was [http://nobelprize.org/physics/laureates/1982/wilson-autobio.html ''Nobel laureate 1982'']).

* Zinn-Justin, Jean ; ''Phase Transitions & Renormalization Group: from Theory to Numbers'', Poincaré Seminar (Paris, Oct. 12, 2002), published in: Duplantier, Bertrand; Rivasseau, Vincent (Eds.) ; ''Poincaré Seminar 2002'', Progress in Mathematical Physics 30, Birkhäuser (2003) ISBN 3-7643-0579-7. Full text available in [http://parthe.lpthe.jussieu.fr/poincare/textes/octobre2002/Zinn.ps ''PostScript''].

* Domb, Cyril ; ''The Critical Point: A Historical Introduction to the Modern Theory of Critical Phenomena'', CRC Press (March, 1996) ISBN 0-7484-0435-X.

* Brown, Laurie M. (Ed.) ; ''Renormalization: From Lorentz to Landau (and Beyond)'', Springer-Verlag (New York-1993) ISBN 0-387-97933-6.

* [[John Cardy|Cardy, John]] ; ''Scaling and Renormalization in Statistical Physics'', Cambridge University Press (1996) ISBN 0-521-49959-3.

=== Miscellaneous ===

* [[Dmitry Shirkov|Shirkov, Dmitry]] ; ''The Bogoliubov Renormalization Group'', JINR Communication E2-96-15 (1996). Full text available at: [http://arxiv.org/abs/hep-th/9602024 ''hep-th/9602024'']

* García Moreta, José Javier ; [http://prespacetime.com/index.php/pst/article/view/498 ''The Application of Zeta Regularization Method to the Calculation of Certain Divergent Series and Integrals''], Prespacetime Journal 4(3), in the issue [http://prespacetime.com/index.php/pst/issue/view/41/showToc ''Refined Higgs, CMB from Planck, Departures in Logic, and GR Issues & Solutions''].

* Zinn-Justin, Jean ; ''Renormalization and renormalization group: From the discovery of UV divergences to the concept of effective field theories'', in: de Witt-Morette C., Zuber J.-B. (eds), Proceedings of the NATO ASI on ''Quantum Field Theory: Perspective and Prospective'', June 15–26, 1998, Les Houches, France, Kluwer Academic Publishers, NATO ASI Series C 530, 375–388 (1999). Full text available in [http://www-spht.cea.fr/articles/t98/118/ ''PostScript''].

* Connes, Alain ; ''Symétries Galoisiennes & Renormalisation'', Poincaré Seminar (Paris, Oct. 12, 2002), published in: Duplantier, Bertrand; Rivasseau, Vincent (Eds.) ; ''Poincaré Seminar 2002'', Progress in Mathematical Physics 30, Birkhäuser (2003) ISBN 3-7643-0579-7. French mathematician [http://www.alainconnes.org ''Alain Connes''] (Fields medallist 1982) describes the underlying mathematical structure (the [[Hopf algebra]]) of renormalization, and its link to the Riemann-Hilbert problem. Full text (in French) available at [http://arxiv.org/pdf/math/0211199v1 ''math/0211199v1''].

== References ==

{{reflist}}

[[Category:Concepts in physics]]

[[Category:Particle physics]]

[[Category:Quantum field theory]]

[[Category:Renormalization group]]

[[Category:Mathematical physics]]

Latest revision as of 02:26, 3 January 2014



In quantum field theory, the statistical mechanics of fields, and the theory of self-similar geometric structures, renormalization is any of a collection of techniques used to treat infinities arising in calculated quantities.

When describing space and time as a continuum, certain statistical and quantum mechanical constructions are ill defined. To define them, the continuum limit has to be taken carefully.

Renormalization establishes a relationship between parameters in the theory, when the parameters describing large distance scales differ from the parameters describing small distances. Renormalization was first developed in quantum electrodynamics (QED) to make sense of infinite integrals in perturbation theory. Initially viewed as a suspicious provisional procedure even by some of its originators, renormalization eventually was embraced as an important and self-consistent tool in several fields of physics and mathematics.

Self-interactions in classical physics

The problem of infinities first arose in the classical electrodynamics of point particles in the 19th and early 20th centuries.

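The mass of a charged particle should include the mass-energy in its electrostatic field (electromagnetic mass). Assume that the particle is a charged spherical shell of radius <math>r_e</math>. The mass-energy in the field is

<math>m_\mathrm{em} = \int \frac{1}{2} E^2 \, dV = \int_{r_e}^\infty \frac{1}{2} \left( \frac{q}{4\pi r^2} \right)^2 4\pi r^2 \, dr = \frac{q^2}{8\pi r_e},</math>

which becomes infinite in the limit as <math>r_e</math> approaches zero. This implies that the point particle would have infinite inertia, making it unable to be accelerated. Incidentally, the value of <math>r_e</math> that makes <math>m_\mathrm{em}</math> equal to the electron mass, up to a conventional factor of 2, is called the classical electron radius, which (setting <math>q = e</math> and restoring factors of <math>c</math> and <math>\varepsilon_0</math>) turns out to be

<math>r_e = \frac{e^2}{4\pi\varepsilon_0 m_e c^2} = \alpha \frac{\hbar}{m_e c} \approx 2.8 \times 10^{-15}\ \mathrm{m},</math>

where <math>\alpha \approx 1/137</math> is the fine structure constant, and <math>\hbar/(m_e c)</math> is the reduced Compton wavelength of the electron.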
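A quick numerical check of the classical electron radius can be sketched as follows, using the standard relation that the radius equals the fine structure constant times the reduced Compton wavelength, <math>\hbar/(m_e c)</math>. The CODATA 2018 constants and the function names are assumptions introduced for illustration.

```python
# CODATA 2018 values in SI units (assumed here for illustration).
ALPHA = 7.2973525693e-3          # fine structure constant (dimensionless)
HBAR = 1.054571817e-34           # reduced Planck constant, J s
ELECTRON_MASS = 9.1093837015e-31 # kg
SPEED_OF_LIGHT = 299792458.0     # m / s


def reduced_compton_wavelength(mass_kg: float) -> float:
    """Reduced Compton wavelength hbar / (m c) in metres."""
    return HBAR / (mass_kg * SPEED_OF_LIGHT)


def classical_radius(mass_kg: float) -> float:
    """Classical radius alpha * hbar / (m c) of a particle of unit charge e."""
    return ALPHA * reduced_compton_wavelength(mass_kg)


if __name__ == "__main__":
    print(f"reduced Compton wavelength: {reduced_compton_wavelength(ELECTRON_MASS):.4e} m")
    print(f"classical electron radius:  {classical_radius(ELECTRON_MASS):.4e} m")
```

The result is roughly 2.8 × 10<sup>−15</sup> m, about 137 times smaller than the reduced Compton wavelength. This is the length scale at which the classical self-energy problem becomes acute.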

The total effective mass of a spherical charged particle includes the actual bare mass of the spherical shell (in addition to the aforementioned mass associated with its electric field). If the shell's bare mass is allowed to be negative, it might be possible to take a consistent point limit.[1] This was called renormalization, and Lorentz and Abraham attempted to develop a classical theory of the electron this way. This early work was the inspiration for later attempts at regularization and renormalization in quantum field theory.

When calculating the electromagnetic interactions of charged particles, it is tempting to ignore the back-reaction of a particle's own field on itself. But this back-reaction is necessary to explain the friction on charged particles when they emit radiation. If the electron is assumed to be a point, the value of the back-reaction diverges for the same reason that the mass diverges: the field is inverse-square.

The Abraham–Lorentz theory had a noncausal "pre-acceleration". Sometimes an electron would start moving before the force is applied. This is a sign that the point limit is inconsistent. An extended body will start moving when a force is applied within one radius of the center of mass.

The trouble was worse in classical field theory than in quantum field theory, because in quantum field theory a charged particle experiences Zitterbewegung due to interference with virtual particle-antiparticle pairs, thus effectively smearing out the charge over a region comparable to the Compton wavelength. In quantum electrodynamics at small coupling the electromagnetic mass only diverges as the logarithm of the radius of the particle.

Divergences in quantum electrodynamics


When developing quantum electrodynamics in the 1930s, Max Born, Werner Heisenberg, Pascual Jordan, and Paul Dirac discovered that in perturbative calculations many integrals were divergent.

One way of describing the divergences was discovered in the 1930s by Ernst Stueckelberg, in the 1940s by Julian Schwinger, Richard Feynman, and Shin'ichiro Tomonaga, and systematized by Freeman Dyson. The divergences appear in calculations involving Feynman diagrams with closed loops of virtual particles in them.

While virtual particles obey conservation of energy and momentum, they can have any energy and momentum, even one that is not allowed by the relativistic energy-momentum relation for the observed mass of that particle. (That is, <math>E^2 - p^2</math> is not necessarily the squared mass of the particle in that process; e.g. for a photon it could be nonzero.) Such a particle is called off-shell. When there is a loop, the momentum of the particles involved in the loop is not uniquely determined by the energies and momenta of incoming and outgoing particles. A variation in the energy of one particle in the loop must be balanced by an equal and opposite variation in the energy of another particle in the loop. So to find the amplitude for the loop process one must integrate over all possible combinations of energy and momentum that could travel around the loop.

These integrals are often divergent, that is, they give infinite answers. The divergences which are significant are the "ultraviolet" (UV) ones. An ultraviolet divergence can be described as one which comes from

- the region in the integral where all particles in the loop have large energies and momenta.

- very short-wavelength, high-frequency fluctuations of the fields, in the path integral for the field.

- very short proper time between particle emission and absorption, if the loop is thought of as a sum over particle paths.

So these divergences are short-distance, short-time phenomena.

There are exactly three one-loop divergent loop diagrams in quantum electrodynamics:[2]

- a photon creates a virtual electron-positron pair, which then annihilates; this is a vacuum polarization diagram.

- an electron quickly emits and reabsorbs a virtual photon; this is called a self-energy.

- an electron emits a photon, emits a second photon, and reabsorbs the first. This process is shown in Figure 2, and it is called a vertex renormalization. The Feynman diagram for this is also called a penguin diagram because its shape remotely resembles a penguin (with the initial and final state electrons as the arms and legs, the second photon as the body and the first looping photon as the head).

The three divergences correspond to the three parameters in the theory:

- the field normalization Z.

- the mass of the electron.

- the charge of the electron.

A second class of divergence, called an infrared divergence, is due to massless particles, like the photon. Every process involving charged particles emits infinitely many coherent photons of infinite wavelength, and the amplitude for emitting any finite number of photons is zero. For photons, these divergences are well understood. For example, at the 1-loop order, the vertex function has both ultraviolet and infrared divergences. In contrast to the ultraviolet divergence, the infrared divergence does not require the renormalization of a parameter in the theory. The infrared divergence of the vertex diagram is removed by including a diagram similar to the vertex diagram with the following important difference: the photon connecting the two legs of the electron is cut and replaced by two on-shell (i.e. real) photons whose wavelengths tend to infinity; this diagram is equivalent to the bremsstrahlung process. This additional diagram must be included because there is no physical way to distinguish a zero-energy photon flowing through a loop as in the vertex diagram and zero-energy photons emitted through bremsstrahlung. From a mathematical point of view, the IR divergences can be regularized by assuming fractional differentiation with respect to a parameter. For example, <math>(p^2 - a^2)^{1/2}</math> is well defined at <math>p = a</math> but is UV divergent; if we take the 3/2-th fractional derivative with respect to <math>-a^2</math>, we obtain the IR divergence <math>1/(p^2 - a^2)</math>, so we can cure IR divergences by turning them into UV divergences.

A loop divergence

The diagram in Figure 2 shows one of the several one-loop contributions to electron-electron scattering in QED. The electron on the left side of the diagram, represented by the solid line, starts out with four-momentum <math>p^\mu</math> and ends up with four-momentum <math>r^\mu</math>. It emits a virtual photon carrying <math>p^\mu - r^\mu</math> to transfer energy and momentum to the other electron. But in this diagram, before that happens, it emits another virtual photon carrying four-momentum <math>q^\mu</math>, and it reabsorbs this one after emitting the other virtual photon. Energy and momentum conservation do not determine the four-momentum <math>q^\mu</math> uniquely, so all possibilities contribute equally and we must integrate.

This diagram's amplitude ends up with, among other things, a factor from the loop of

<math>(-ie)^3 \int \frac{d^4 q}{(2\pi)^4} \, \gamma^\mu \frac{i \left( \gamma^\alpha (r-q)_\alpha + m \right)}{(r-q)^2 - m^2 + i\epsilon} \gamma^\rho \frac{i \left( \gamma^\beta (p-q)_\beta + m \right)}{(p-q)^2 - m^2 + i\epsilon} \gamma^\nu \frac{-i g_{\mu\nu}}{q^2 + i\epsilon}.</math>

The various <math>\gamma^\mu</math> factors in this expression are gamma matrices as in the covariant formulation of the Dirac equation; they have to do with the spin of the electron. The factors of <math>e</math> are the electric coupling constant, while the <math>i\epsilon</math> provide a heuristic definition of the contour of integration around the poles in the space of momenta. The important part for our purposes is the dependency on <math>q^\mu</math> of the three big factors in the integrand, which are from the propagators of the two electron lines and the photon line in the loop.

This has a piece with two powers of <math>q^\mu</math> on top that dominates at large values of <math>q^\mu</math> (Pokorski 1987, p. 122):

<math>-e^3 \gamma^\mu \gamma^\alpha \gamma^\rho \gamma^\beta \gamma_\mu \int \frac{d^4 q}{(2\pi)^4} \frac{q_\alpha q_\beta}{(r-q)^2 (p-q)^2 q^2}.</math>

This integral is divergent, and infinite unless we cut it off at finite energy and momentum in some way.
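The degree of divergence can be estimated by power counting. The following is a sketch, assuming a loop four-momentum <math>q</math> much larger than the external momenta, a Wick rotation to Euclidean momenta (overall signs and factors of <math>i</math> are dropped), and a sharp cutoff <math>\Lambda</math> with lower matching scale <math>\mu</math>; these symbols are introduced here for illustration. In that regime the three propagators contribute <math>1/q^6</math>, and symmetric integration replaces <math>q_\alpha q_\beta</math> by <math>\tfrac{1}{4} g_{\alpha\beta} q^2</math>, so that

<math>\int^{\Lambda} \frac{d^4 q}{(2\pi)^4} \frac{q_\alpha q_\beta}{q^6} \;\sim\; \frac{g_{\alpha\beta}}{4} \, \frac{2\pi^2}{(2\pi)^4} \int_{\mu}^{\Lambda} \frac{|q|^3 \, d|q|}{|q|^4} \;=\; \frac{g_{\alpha\beta}}{32\pi^2} \ln\frac{\Lambda}{\mu}.</math>

The integral therefore grows only logarithmically with the cutoff, which is the mildest kind of ultraviolet divergence.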

Similar loop divergences occur in other quantum field theories.

Renormalized and bare quantities


The solution was to realize that the quantities initially appearing in the theory's formulae (such as the formula for the Lagrangian), representing such things as the electron's electric charge and mass, as well as the normalizations of the quantum fields themselves, did not actually correspond to the physical constants measured in the laboratory. As written, they were bare quantities that did not take into account the contribution of virtual-particle loop effects to the physical constants themselves. Among other things, these effects would include the quantum counterpart of the electromagnetic back-reaction that so vexed classical theorists of electromagnetism. In general, these effects would be just as divergent as the amplitudes under study in the first place; so finite measured quantities would in general imply divergent bare quantities.

In order to make contact with reality, then, the formulae would have to be rewritten in terms of measurable, renormalized quantities. The charge of the electron, say, would be defined in terms of a quantity measured at a specific kinematic renormalization point or subtraction point (which will generally have a characteristic energy, called the renormalization scale or simply the energy scale). The parts of the Lagrangian left over, involving the remaining portions of the bare quantities, could then be reinterpreted as counterterms, involved in divergent diagrams exactly canceling out the troublesome divergences for other diagrams.

Renormalization in QED

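For example, in the Lagrangian of QED

<math>\mathcal{L} = \bar\psi_B \left[ i \gamma_\mu \left( \partial^\mu + i e_B A_B^\mu \right) - m_B \right] \psi_B - \frac{1}{4} F_{B\mu\nu} F_B^{\mu\nu}</math>

the fields and coupling constant are really bare quantities, hence the subscript <math>B</math> above. Conventionally the bare quantities are written so that the corresponding Lagrangian terms are multiples of the renormalized ones:

<math>\left( \bar\psi m \psi \right)_B = Z_0 \, \bar\psi m \psi</math>

<math>\left( \bar\psi \left( \partial^\mu + i e A^\mu \right) \psi \right)_B = Z_1 \, \bar\psi \left( \partial^\mu + i e A^\mu \right) \psi</math>

<math>\left( F_{\mu\nu} F^{\mu\nu} \right)_B = Z_3 \, F_{\mu\nu} F^{\mu\nu}.</math>

(Gauge invariance, via a Ward–Takahashi identity, turns out to imply that we can renormalize the two terms of the covariant derivative piece together (Pokorski 1987, p. 115), which is what happened to <math>Z_2</math>; it is the same as <math>Z_1</math>.)

A term in this Lagrangian, for example, the electron-photon interaction pictured in Figure 1, can then be written

<math>\mathcal{L}_I = -e \bar\psi \gamma_\mu A^\mu \psi - (Z_1 - 1) e \bar\psi \gamma_\mu A^\mu \psi.</math>

The physical constant <math>e</math>, the electron's charge, can then be defined in terms of some specific experiment; we set the renormalization scale equal to the energy characteristic of this experiment, and the first term gives the interaction we see in the laboratory (up to small, finite corrections from loop diagrams, providing such exotica as the high-order corrections to the magnetic moment). The rest is the counterterm. If the theory is renormalizable (see below for more on this), as it is in QED, the divergent parts of loop diagrams can all be decomposed into pieces with three or fewer legs, with an algebraic form that can be canceled out by the second term (or by the similar counterterms that come from <math>Z_0</math> and <math>Z_3</math>).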

The diagram with the counterterm's interaction vertex placed as in Figure 3 cancels out the divergence from the loop in Figure 2.

The splitting of the "bare terms" into the original terms and counterterms predates the renormalization group insights[3] due to Kenneth Wilson. According to those insights, this splitting is unnatural and actually unphysical.

Running couplings

To minimize the contribution of loop diagrams to a given calculation (and therefore make it easier to extract results), one chooses a renormalization point close to the energies and momenta actually exchanged in the interaction. However, the renormalization point is not itself a physical quantity: the physical predictions of the theory, calculated to all orders, should in principle be independent of the choice of renormalization point, as long as it is within the domain of application of the theory. Changes in renormalization scale will simply affect how much of a result comes from Feynman diagrams without loops, and how much comes from the leftover finite parts of loop diagrams. One can exploit this fact to calculate the effective variation of physical constants with changes in scale. This variation is encoded by beta-functions, and the general theory of this kind of scale-dependence is known as the renormalization group.

Colloquially, particle physicists often speak of certain physical "constants" as varying with the energy of an interaction, though in fact it is the renormalization scale that is the independent quantity. This running does, however, provide a convenient means of describing changes in the behavior of a field theory under changes in the energies involved in an interaction. For example, since the coupling in quantum chromodynamics becomes small at large energy scales, the theory behaves more like a free theory as the energy exchanged in an interaction becomes large, a phenomenon known as asymptotic freedom. Choosing an increasing energy scale and using the renormalization group makes this clear from simple Feynman diagrams; were this not done, the prediction would be the same, but would arise from complicated high-order cancellations.
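As a hedged illustration of the running described above, the sketch below evaluates the standard one-loop solution of the renormalization group equation for the strong coupling, α_s(Q) = α_s(μ) / (1 + b0 α_s(μ) ln(Q²/μ²)) with b0 = (33 − 2 n_f)/(12π). The function name, the fixed number of flavours n_f = 5, and the reference inputs α_s ≈ 0.118 at μ ≈ 91.19 GeV are illustrative assumptions, not taken from the text; flavour thresholds and higher-loop terms are ignored.

```python
import math

def alpha_s_one_loop(q, alpha_ref=0.118, mu=91.19, n_f=5):
    """One-loop running strong coupling at scale q (GeV).

    alpha_ref is the coupling at the reference scale mu; both defaults
    are illustrative inputs. n_f is held fixed (no flavour thresholds).
    """
    b0 = (33 - 2 * n_f) / (12 * math.pi)
    return alpha_ref / (1 + b0 * alpha_ref * math.log(q**2 / mu**2))

# The coupling shrinks as the scale grows (asymptotic freedom).
for q in (10.0, 91.19, 1000.0, 10000.0):
    print(f"Q = {q:8.2f} GeV  alpha_s = {alpha_s_one_loop(q):.4f}")

# Group property: running mu -> 500 GeV -> 5000 GeV in two steps
# gives the same coupling as running mu -> 5000 GeV directly.
a_mid = alpha_s_one_loop(500.0)
two_step = alpha_s_one_loop(5000.0, alpha_ref=a_mid, mu=500.0)
print(abs(two_step - alpha_s_one_loop(5000.0)) < 1e-12)
```

The last check reflects the point made in the text: the reference scale is not physical, so the coupling at a given scale does not depend on the intermediate scale through which it was run.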

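To eliminate the divergence, simply change the lower limit of each integral to <math>\varepsilon_a</math> and <math>\varepsilon_b</math>:

<math>I = \ln a - \ln b - \ln \varepsilon_a + \ln \varepsilon_b = \ln \frac{a}{b} - \ln \frac{\varepsilon_a}{\varepsilon_b}</math>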

Regularization

Since the quantity <math>\infty - \infty</math> is ill-defined, in order to make this notion of canceling divergences precise, the divergences first have to be tamed mathematically using the theory of limits, in a process known as regularization.

An essentially arbitrary modification to the loop integrands, or regulator, can make them drop off faster at high energies and momenta, in such a manner that the integrals converge. A regulator has a characteristic energy scale known as the cutoff; taking this cutoff to infinity (or, equivalently, the corresponding length/time scale to zero) recovers the original integrals.

With the regulator in place, and a finite value for the cutoff, divergent terms in the integrals then turn into finite but cutoff-dependent terms. After canceling out these terms with the contributions from cutoff-dependent counterterms, the cutoff is taken to infinity and finite physical results recovered. If physics on scales we can measure is independent of what happens at the very shortest distance and time scales, then it should be possible to get cutoff-independent results for calculations.
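The cutoff procedure described above can be sketched on a toy example. The integral ∫₀^Λ k dk / (k² + m²) is not a QED loop integral; it is chosen here only because it is logarithmically divergent and has a simple closed form. The function names and the parameters m and m_ref are hypothetical. The sketch shows that each regulated integral grows with the cutoff Λ, while a difference of two of them, which stands in for a divergent term plus its counterterm, tends to a finite, cutoff-independent value.

```python
import math

def regulated_integral(cutoff, m):
    """Closed form of the toy integral  int_0^cutoff k dk / (k^2 + m^2).

    It grows like log(cutoff): finite for any finite cutoff, divergent
    when the cutoff is removed.
    """
    return 0.5 * math.log1p((cutoff / m) ** 2)

def subtracted(cutoff, m, m_ref):
    """Difference of two regulated integrals; the log(cutoff) pieces cancel."""
    return regulated_integral(cutoff, m) - regulated_integral(cutoff, m_ref)

for cutoff in (1e1, 1e2, 1e4, 1e8):
    print(f"cutoff = {cutoff:8.0e}  "
          f"regulated = {regulated_integral(cutoff, 1.0):8.4f}  "
          f"subtracted = {subtracted(cutoff, 1.0, 2.0):.6f}")

# Limit of the subtracted quantity as the cutoff is removed: ln(m_ref / m).
print("limit:", math.log(2.0))
```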

Many different types of regulator are used in quantum field theory calculations, each with its advantages and disadvantages. One of the most popular in modern use is dimensional regularization, invented by Gerardus 't Hooft and Martinus J. G. Veltman,[4] which tames the integrals by carrying them into a space with a fictitious fractional number of dimensions. Another is Pauli–Villars regularization, which adds fictitious particles to the theory with very large masses, such that loop integrands involving the massive particles cancel out the existing loops at large momenta.
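A minimal worked example of the Pauli–Villars idea, stated for a single scalar propagator in Euclidean momentum space (the symbols k, m and the regulator mass M are notation introduced here, not taken from the text): subtracting the propagator of a fictitious heavy particle improves the large-momentum falloff from 1/k² to 1/k⁴.

```latex
\frac{1}{k^2 + m^2} - \frac{1}{k^2 + M^2}
  = \frac{M^2 - m^2}{(k^2 + m^2)(k^2 + M^2)}
  \;\xrightarrow{\;k^2 \gg M^2\;}\; \frac{M^2 - m^2}{k^4}
```

For k² much smaller than M² the subtracted term is of order 1/M² and the original propagator is recovered, so M plays the role of the cutoff.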

Yet another regularization scheme is lattice regularization, introduced by Kenneth Wilson, which treats space-time as a hypercubic lattice with fixed grid size. This size provides a natural cutoff for the maximal momentum that a particle can possess when propagating on the lattice. After calculations are done on several lattices with different grid sizes, the physical result is extrapolated to grid size 0, that is, to our natural universe. This presupposes the existence of a scaling limit.
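The extrapolation to zero grid size can be illustrated with a one-dimensional toy quantity, not an actual lattice field theory computation. On a lattice with spacing a, the nearest-neighbour second difference acting on a plane wave gives (2/a²)(1 − cos(pa)) in place of the continuum value p², with a leading error proportional to a². The function names below are hypothetical, and Richardson extrapolation is used here as one simple way to take the limit.

```python
import math

def lattice_p2(p, a):
    """Lattice version of p^2 from the nearest-neighbour second difference.

    The eigenvalue of the lattice operator on a plane wave exp(i p x) is
    (2 / a^2) * (1 - cos(p a)) = p^2 - p^4 a^2 / 12 + ...
    """
    return 2.0 * (1.0 - math.cos(p * a)) / a**2

def extrapolate_to_zero(p, a):
    """Richardson extrapolation in a^2 from spacings a and a/2."""
    return (4.0 * lattice_p2(p, a / 2) - lattice_p2(p, a)) / 3.0

p = 1.0
for a in (0.5, 0.25, 0.125):
    print(f"a = {a:5.3f}  lattice p^2 = {lattice_p2(p, a):.6f}  "
          f"max momentum pi/a = {math.pi / a:.3f}")
print("extrapolated:", extrapolate_to_zero(p, 0.5), " continuum:", p**2)
```

The printed maximum momentum π/a is the momentum cutoff that the grid size imposes; it moves to infinity as a goes to zero.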

A rigorous mathematical approach to renormalization theory is the so-called causal perturbation theory, where ultraviolet divergences are avoided from the start in calculations by performing well-defined mathematical operations only within the framework of distribution theory. The disadvantage of the method is that it is quite technical and requires a high level of mathematical knowledge.

Zeta function regularization

Julian Schwinger discovered a relationship between zeta function regularization and renormalization, using the asymptotic relation:

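<math>I(n, \Lambda) = \int_0^\Lambda dp \, p^n \sim 1 + 2^n + 3^n + \cdots + \Lambda^n \to \zeta(-n)</math>

as the regulator <math>\Lambda \to \infty</math>. Based on this, he considered using the values of <math>\zeta(-n)</math> to get finite results. Although he reached inconsistent results, an improved formula studied by Hartle, J. Garcia, and based on the works by E. Elizalde includes the technique of the zeta regularization algorithm

<math>I(n, \Lambda) = \frac{n}{2} I(n-1, \Lambda) + \zeta(-n) - \sum_{r=1}^\infty \frac{B_{2r}}{(2r)!} a_{n,r} (n-2r+1) I(n-2r, \Lambda)</math>

where the B's are the Bernoulli numbers and

<math>a_{n,r} = \frac{\Gamma(n+1)}{\Gamma(n-2r+2)}</math>

So every <math>I(m, \Lambda)</math> can be written as a linear combination of <math>\zeta(-1), \zeta(-3), \zeta(-5), \ldots, \zeta(-m)</math>.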

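Or, simply using the Abel–Plana formula, we have for every divergent integral:

<math>\zeta(-m, \beta) - \frac{\beta^m}{2} - i\int_0^\infty dt \, \frac{(it+\beta)^m - (-it+\beta)^m}{e^{2\pi t}-1} = \int_0^\infty dp \, (p+\beta)^m</math>

valid when m > 0. Here the zeta function is the Hurwitz zeta function and β is a positive real number.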
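For β = 1 the Hurwitz zeta function reduces to the Riemann zeta function, whose values at negative integers are the finite numbers this section assigns to divergent power sums. As a small sketch, they can be computed exactly from the Bernoulli numbers through the standard identity ζ(−n) = (−1)ⁿ B_{n+1} / (n + 1), using the convention B₁ = −1/2. The function names are hypothetical.

```python
from fractions import Fraction
from math import comb

def bernoulli_numbers(n_max):
    """Bernoulli numbers B_0..B_n_max as exact fractions (B_1 = -1/2)."""
    b = [Fraction(1)]
    for m in range(1, n_max + 1):
        # Recurrence: sum_{k=0}^{m} C(m+1, k) * B_k = 0, solved for B_m.
        s = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-s / (m + 1))
    return b

def zeta_at_negative_integer(n):
    """zeta(-n) = (-1)^n * B_{n+1} / (n + 1) for integer n >= 0."""
    b = bernoulli_numbers(n + 1)
    return (-1) ** n * b[n + 1] / (n + 1)

for n in (1, 3, 5):
    print(f"zeta(-{n}) = {zeta_at_negative_integer(n)}")
```

The loop prints the familiar values −1/12, 1/120 and −1/252; exact fractions are used so that no rounding enters.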

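The "geometric" analogy is obtained by using the rectangle method to evaluate the integral:

<math>\int_0^\infty dx \, (\beta + x)^m \approx \sum_{n=0}^\infty h \, (\beta + nh)^m = h^{m+1} \zeta\left(-m, \beta h^{-1}\right)</math>

that is, Hurwitz zeta regularization combined with the rectangle method with step h (not to be confused with Planck's constant).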

The logarithmically divergent integral has the regularization

<math>\sum_{n=0}^\infty \frac{1}{n+a} = -\psi(a) + \log(a)</math>

For multi-loop integrals, which depend on several variables, we can change variables to polar coordinates and then replace the integral over the angles by a sum, so that only a divergent integral over the modulus remains, to which the zeta regularization algorithm can be applied. The main idea for multi-loop integrals is to replace the factor <math>F(q_1,\ldots,q_n)</math>, after a change to hyperspherical coordinates, by <math>F(r,\Omega)</math>, so that the overlapping UV divergences are encoded in the variable r. To regularize these integrals one needs a regulator; for multi-loop integrals it can be taken as <math>\left(1+\sqrt{q_i q^i}\right)^{-s}</math>, so that the multi-loop integral converges for large enough s. Using zeta regularization, we can analytically continue the variable s to the physical limit s = 0 and thereby regularize any UV integral, replacing a divergent integral by a linear combination of divergent series, which can be regularized in terms of the values of the Riemann zeta function at negative arguments.

Attitudes and interpretation

The early formulators of QED and other quantum field theories were, as a rule, dissatisfied with this state of affairs. It seemed illegitimate to do something tantamount to subtracting infinities from infinities to get finite answers.

Freeman Dyson has shown that these infinities are of a basic nature and cannot be eliminated by any formal mathematical procedures, such as the renormalization method.[5][6]

Dirac's criticism was the most persistent.[7] As late as 1975, he was saying:[8]

- Most physicists are very satisfied with the situation. They say: 'Quantum electrodynamics is a good theory and we do not have to worry about it any more.' I must say that I am very dissatisfied with the situation, because this so-called 'good theory' does involve neglecting infinities which appear in its equations, neglecting them in an arbitrary way. This is just not sensible mathematics. Sensible mathematics involves neglecting a quantity when it is small - not neglecting it just because it is infinitely great and you do not want it!

Another important critic was Feynman. Despite his crucial role in the development of quantum electrodynamics, he wrote the following in 1985:[9]

- The shell game that we play ... is technically called 'renormalization'. But no matter how clever the word, it is still what I would call a dippy process! Having to resort to such hocus-pocus has prevented us from proving that the theory of quantum electrodynamics is mathematically self-consistent. It's surprising that the theory still hasn't been proved self-consistent one way or the other by now; I suspect that renormalization is not mathematically legitimate.

While Dirac's criticism was based on the procedure of renormalization itself, Feynman's criticism was very different. Feynman was concerned that all field theories known in the 1960s had the property that the interactions become infinitely strong at short enough distance scales. This property, called a Landau pole, made it plausible that quantum field theories were all inconsistent. In 1973, Gross, Politzer and Wilczek showed that another quantum field theory, quantum chromodynamics, does not have a Landau pole. Feynman, along with most others, accepted that QCD was a fully consistent theory.

The general unease was almost universal in texts up to the 1970s and 1980s. Beginning in the 1970s, however, inspired by work on the renormalization group and effective field theory, and despite the fact that Dirac and various others—all of whom belonged to the older generation—never withdrew their criticisms, attitudes began to change, especially among younger theorists. Kenneth G. Wilson and others demonstrated that the renormalization group is useful in statistical field theory applied to condensed matter physics, where it provides important insights into the behavior of phase transitions. In condensed matter physics, a real short-distance regulator exists: matter ceases to be continuous on the scale of atoms. Short-distance divergences in condensed matter physics do not present a philosophical problem, since the field theory is only an effective, smoothed-out representation of the behavior of matter anyway; there are no infinities since the cutoff is actually always finite, and it makes perfect sense that the bare quantities are cutoff-dependent.

Unless QFT holds all the way down past the Planck length (where it might yield to string theory, causal set theory or something different), there may be no real problem with short-distance divergences in particle physics either; all field theories could simply be effective field theories. In a sense, this approach echoes the older attitude that the divergences in QFT speak of human ignorance about the workings of nature, but also acknowledges that this ignorance can be quantified and that the resulting effective theories remain useful.

Be that as it may, Salam's remark[10] in 1972 still seems relevant