'''Parallel processing''' in [[digital signal processing]] (DSP) is a technique that duplicates function units so that different tasks (signals) can be processed simultaneously.<ref>K.K. Parhi, VLSI Digital Signal Processing Systems: Design and Implementation, John Wiley, 1999</ref> The same processing can therefore be applied to different [[Signal_(electrical_engineering)|signals]] on the corresponding duplicated function units. Because of the nature of [[parallel processing]], a parallel DSP design typically produces multiple outputs per clock period, which gives higher throughput than a non-parallel design.

==Conceptual Example==

Consider a function unit (''F''<sub>0</sub>) and three tasks (''T''<sub>0</sub>, ''T''<sub>1</sub> and ''T''<sub>2</sub>). The time required for the function unit ''F''<sub>0</sub> to process these tasks is ''t''<sub>0</sub>, ''t''<sub>1</sub> and ''t''<sub>2</sub> respectively. If the three tasks are processed sequentially, the time required to complete them is ''t''<sub>0</sub>+''t''<sub>1</sub>+''t''<sub>2</sub>.

<br> [[File:Non-parallel.png|center|400px]]

However, if we duplicate the function unit into two additional copies (''F''), so that each task has its own unit, the total time is reduced to max(''t''<sub>0</sub>, ''t''<sub>1</sub>, ''t''<sub>2</sub>), which is less than the time needed in sequential order.

<br> [[File:Parallel-tasks.png|center|350px]]
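The comparison above can be sketched in a few lines of Python. The function names and the three task durations are hypothetical values chosen only for illustration; they do not come from the cited source.

```python
def sequential_time(task_times):
    """Total time when one function unit processes the tasks one after another."""
    return sum(task_times)


def parallel_time(task_times):
    """Total time when every task runs on its own copy of the function unit."""
    return max(task_times)


# Hypothetical durations t0, t1, t2 in arbitrary time units.
task_times = [3.0, 5.0, 2.0]

print(sequential_time(task_times))  # 10.0
print(parallel_time(task_times))    # 5.0
```

The parallel total is set by the slowest task, so duplicating units helps most when the task durations are similar.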

==Parallel Processing Versus Pipelining==

Mechanism:
*Parallel: duplicated function units working in parallel
**Each task is processed entirely by a different function unit.
*[[Pipelining]]: different function units working in parallel
**Each task is split into a sequence of sub-tasks, which are handled by specialized and different function units.

Objective:
*Pipelining leads to a reduction in the critical path, which can increase the sample speed or reduce power consumption at the same speed.
*Parallel processing techniques require multiple outputs, which are computed in parallel in a clock period. Therefore, the effective sample speed is increased by the level of parallelism.

When both parallel processing and pipelining can be applied, parallel processing is often the better choice, for the following reasons:
*Pipelining usually causes I/O bottlenecks.
*Parallel processing can also be used to reduce power consumption by running with slower clocks.
*A hybrid of pipelining and parallel processing further increases the speed of the architecture.
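As a rough illustration of how the two techniques combine, the following sketch computes an idealised sample period. It assumes that pipelining splits the critical path into perfectly balanced stages and that an ''N''-parallel design produces ''N'' samples per clock period; the function name, parameters and the value 12.0 are hypothetical.

```python
def sample_period(critical_path, pipeline_stages=1, parallel_copies=1):
    """Idealised lower bound on the sample period.

    Assumption: pipelining divides the critical path into equal stages, and
    an N-parallel design emits N samples in every clock period.
    """
    clock_period = critical_path / pipeline_stages
    return clock_period / parallel_copies


critical_path = 12.0  # hypothetical delay in arbitrary time units

print(sample_period(critical_path))                                        # 12.0
print(sample_period(critical_path, pipeline_stages=3))                     # 4.0
print(sample_period(critical_path, parallel_copies=3))                     # 4.0
print(sample_period(critical_path, pipeline_stages=2, parallel_copies=3))  # 2.0
```

Real designs rarely reach these bounds, since pipeline stages are not perfectly balanced and I/O limits may apply.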

==Parallel FIR Filters==

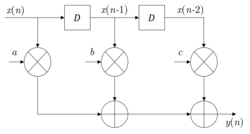

Consider a 3-tap FIR filter:<ref>Slides for VLSI Digital Signal Processing Systems: Design and Implementation, John Wiley & Sons, 1999 (ISBN 0-471-24186-5): http://www.ece.umn.edu/users/parhi/slides.html</ref>

<math>y(n)=ax(n)+bx(n-1)+cx(n-2)</math>

which is shown in the following figure.

Assume that each multiplication unit takes time T<sub>m</sub> and each add unit takes time T<sub>a</sub>. The sample period is given by

<math>{T_{sample} \ge T_m + 2T_a }</math>

[[File:Pipelined FIR filters.png|center|250px]]

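Parallelizing the filter gives the architecture shown below. The clock period must still cover the critical path, <math>T_{clock} \ge T_m + 2T_a</math>, but ''N'' samples are processed in each clock period, so the sample period becomes

<br> <math>{T_{sample} = \frac{T_{clock}}{N} \ge \frac{T_m + 2T_a}{3} }</math>

where ''N'' represents the number of copies (here ''N''=3).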

Note that in a parallel system <math>T_{sample} \ne T_{clock}</math>, whereas <math>T_{sample}=T_{clock}</math> holds in a pipelined system.

[[File:Parallel FIR.png|center|400px]]
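A minimal software sketch of the 3-parallel idea follows. Each loop iteration stands for one clock period in which three outputs are computed from the same filter equation. The function names, the zero initial conditions and the restriction to input lengths that are multiples of 3 are assumptions made for this illustration.

```python
def fir_sequential(x, a, b, c):
    """Reference: y(n) = a*x(n) + b*x(n-1) + c*x(n-2), with x(n) = 0 for n < 0."""
    def sample(n):
        return x[n] if n >= 0 else 0.0
    return [a * sample(n) + b * sample(n - 1) + c * sample(n - 2)
            for n in range(len(x))]


def fir_3_parallel(x, a, b, c):
    """Block version: every iteration models one clock period with 3 outputs."""
    assert len(x) % 3 == 0, "sketch assumes the input length is a multiple of 3"

    def sample(n):
        return x[n] if n >= 0 else 0.0

    y = []
    for k in range(len(x) // 3):
        n = 3 * k
        # In hardware these three expressions are evaluated by three filter copies.
        y0 = a * sample(n) + b * sample(n - 1) + c * sample(n - 2)
        y1 = a * sample(n + 1) + b * sample(n) + c * sample(n - 1)
        y2 = a * sample(n + 2) + b * sample(n + 1) + c * sample(n)
        y.extend([y0, y1, y2])
    return y


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
print(fir_3_parallel(x, 0.5, 0.25, 0.125) == fir_sequential(x, 0.5, 0.25, 0.125))  # True
```

The block version produces the same samples as the sequential filter; only the number of outputs per clock period changes.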

==Parallel 1st-order IIR Filters==

Consider the transfer function of a 1st-order IIR filter,

<br> <math>H(z)=\frac{z^{-1}}{1-az^{-1}}</math>

where |''a''|<1 for stability; the filter has a single pole, located at ''z''=''a''.

The corresponding recursive representation is

<br> <math> y(n+1) = ay(n) + u(n)</math>

Consider the design of a 4-parallel architecture (''N''=4). In such a parallel system, each delay element represents a block delay, and the clock period is four times the sample period.

<br>Therefore, by iterating the recursion four times and setting ''n''=4''k'', we have

<br> <math> y(n+4) = a^{4}y(n) + a^{3}u(n) + a^{2}u(n+1) + au(n+2) + u(n+3)</math>

<math> \rightarrow y(4k+4) = a^{4}y(4k) + a^{3}u(4k) + a^{2}u(4k+1) + au(4k+2) + u(4k+3)</math>

The corresponding architecture is shown below.

[[File:Parallel IIR.png|center|400px]]
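The 4-step recursion can be checked numerically against the original sample-by-sample recursion. The sketch below is only a software check, not a model of the hardware; the function names, the zero initial state and the test input are assumptions made for illustration.

```python
def iir_sequential(u, a, y0=0.0):
    """y(n+1) = a*y(n) + u(n); returns [y(0), y(1), ..., y(len(u))]."""
    y = [y0]
    for sample in u:
        y.append(a * y[-1] + sample)
    return y


def iir_block_lookahead(u, a, y0=0.0):
    """Returns [y(0), y(4), y(8), ...] using the 4-step look-ahead recursion."""
    assert len(u) % 4 == 0, "sketch assumes the input length is a multiple of 4"
    y = [y0]
    for k in range(len(u) // 4):
        n = 4 * k
        y.append(a**4 * y[-1] + a**3 * u[n] + a**2 * u[n + 1]
                 + a * u[n + 2] + u[n + 3])
    return y


u = [1.0, -2.0, 0.5, 3.0, 4.0, 0.0, -1.0, 2.0]
full = iir_sequential(u, 0.5)
block = iir_block_lookahead(u, 0.5)
# Every block output should match every fourth sample of the full recursion.
print(all(abs(block[k] - full[4 * k]) < 1e-12 for k in range(len(block))))  # True
```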

The resulting parallel design has the following properties.
* The pole of the original filter is at ''z''=''a'', while the pole of the parallel system is at ''z''=''a''<sup>4</sup>, which is closer to the origin when |''a''|<1.
* This pole movement improves the robustness of the system to round-off noise.
* The hardware complexity of this architecture is ''N''<sup>2</sup> multiply-add operations.

<br> Note that this quadratic increase in hardware complexity can be reduced by exploiting concurrency and incremental computation to avoid repeated calculations.
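One way to picture incremental computation is sketched below: only the block state ''y''(4''k'') is updated with the 4-step recursion, while the other three outputs of each block are obtained from the previous output with a single multiply-add each. This is an illustrative software sketch under assumed names and a zero initial state, not necessarily the exact architecture described in the cited source.

```python
def iir_4_parallel_incremental(u, a, y0=0.0):
    """Returns [y(0), ..., y(len(u))], processing four input samples per block."""
    assert len(u) % 4 == 0, "sketch assumes the input length is a multiple of 4"
    y = [y0]
    state = y0  # y(4k), the value held in the block delay element
    for k in range(len(u) // 4):
        n = 4 * k
        # Loop update: only this look-ahead recursion feeds the block delay.
        next_state = (a**4 * state + a**3 * u[n] + a**2 * u[n + 1]
                      + a * u[n + 2] + u[n + 3])
        # Incremental outputs: each one reuses the previous output.
        y1 = a * state + u[n]
        y2 = a * y1 + u[n + 1]
        y3 = a * y2 + u[n + 2]
        y.extend([y1, y2, y3, next_state])
        state = next_state
    return y


u = [1.0, -2.0, 0.5, 3.0, 4.0, 0.0, -1.0, 2.0]
print(iir_4_parallel_incremental(u, 0.5)[:3])  # [0.0, 1.0, -1.5]
```

Because the intermediate outputs are chained, they avoid recomputing the powers of ''a'' for every output, at the cost of a longer feed-forward path that can itself be pipelined.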

==Parallel Processing for Low Power==

Another advantage of parallel processing is that it can reduce the power consumption of a system by allowing a lower supply voltage.

<br> Consider the power consumption of a conventional CMOS circuit,

<br> <math>P_{seq}=C_{total}V_0^{2}f</math>

where ''C<sub>total</sub>'' represents the total capacitance of the CMOS circuit, ''V''<sub>0</sub> is the supply voltage and ''f'' is the clock frequency.

<br> For an ''N''-parallel version, the charging capacitance remains the same but the total capacitance increases by a factor of ''N''.

<br> To maintain the same sample rate, the clock period of the ''N''-parallel circuit is increased to ''N'' times the propagation delay of the original circuit.

<br> This leaves ''N'' times as long to charge the same capacitance, so the supply voltage can be reduced to ''β''V<sub>0</sub>.

Therefore, the power consumption of the ''N''-parallel system can be formulated as

<br> <math>P_{para}=(NC_{total})(\beta V_0)^{2}\frac{f}{N}=\beta^2P_{seq} </math>

where ''β'' is obtained by solving the following quadratic equation, in which ''V''<sub>t</sub> is the threshold voltage:

<math> N(\beta V_{0}-V_{t})^{2} = \beta(V_{0}-V_{t})^{2} </math>
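The equation for ''β'' can be solved in closed form by expanding it into a standard quadratic. The sketch below does this and keeps the root for which the reduced supply ''β''V<sub>0</sub> stays above the threshold voltage. The function name and the example values (''N''=3, V<sub>0</sub>=5 V, V<sub>t</sub>=0.6 V) are assumptions chosen for illustration.

```python
import math


def solve_beta(n_parallel, v0, vt):
    """Solve N*(beta*V0 - Vt)^2 = beta*(V0 - Vt)^2 for the physically valid beta."""
    # Expanded form: qa*beta^2 + qb*beta + qc = 0.
    qa = n_parallel * v0**2
    qb = -(2 * n_parallel * v0 * vt + (v0 - vt)**2)
    qc = n_parallel * vt**2
    disc = math.sqrt(qb**2 - 4 * qa * qc)
    roots = [(-qb + disc) / (2 * qa), (-qb - disc) / (2 * qa)]
    # Keep roots whose scaled supply beta*V0 exceeds Vt and does not exceed V0
    # (a small tolerance absorbs floating-point rounding when N = 1).
    valid = [r for r in roots if r * v0 > vt and r <= 1.0 + 1e-12]
    return max(valid)


# Hypothetical example values: 3-parallel design, 5 V supply, 0.6 V threshold.
beta = solve_beta(3, 5.0, 0.6)
print(round(beta, 4))     # 0.4673
print(round(beta**2, 4))  # 0.2184, the ratio P_para / P_seq
```

For these example values the parallel design would consume roughly 22% of the power of the sequential design at the same sample rate, under the simple delay and power models used in this section.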

==References==

<references/>

[[Category:Digital signal processing]]

Latest revision as of 22:22, 3 April 2013
