Kriging: Difference between revisions

en>Alfie66 m Daniel => Danie; removed redundant WLs

en>Gareth Jones →Applications: use template for reference


In [[data mining]], '''hierarchical clustering''' is a method of [[cluster analysis]] which seeks to build a [[hierarchy]] of clusters. Strategies for hierarchical clustering generally fall into two types: {{Cn|date=December 2013}}

*'''Agglomerative''': This is a "bottom up" approach: each observation starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy.
*'''Divisive''': This is a "top down" approach: all observations start in one cluster, and splits are performed recursively as one moves down the hierarchy.

In general, the merges and splits are determined in a [[greedy algorithm|greedy]] manner. The results of hierarchical clustering are usually presented in a [[dendrogram]].

In the general case, the complexity of agglomerative clustering is <math>\mathcal{O}(n^3)</math>, which makes it too slow for large data sets. Divisive clustering with an exhaustive search is <math>\mathcal{O}(2^n)</math>, which is even worse. However, for some special cases, optimally efficient agglomerative methods (of complexity <math>\mathcal{O}(n^2)</math>) are known: SLINK<ref name="SLINK">{{cite journal | author=R. Sibson | title=SLINK: an optimally efficient algorithm for the single-link cluster method | journal=The Computer Journal | volume=16 | issue=1 | pages=30–34 | year=1973 | publisher=British Computer Society | url=http://www.cs.gsu.edu/~wkim/index_files/papers/sibson.pdf | doi=10.1093/comjnl/16.1.30}}</ref> for single-linkage and CLINK<ref>{{cite journal | author=D. Defays | title=An efficient algorithm for a complete link method | journal=The Computer Journal | volume=20 | issue=4 | pages=364–366 | year=1977 | publisher=British Computer Society | url=http://comjnl.oxfordjournals.org/content/20/4/364.abstract | doi=10.1093/comjnl/20.4.364}}</ref> for complete-linkage clustering.
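The following Python sketch is a rough illustration of how an <math>\mathcal{O}(n^2)</math> single-linkage method can work. It computes Sibson's "pointer representation" by following the recurrence from the SLINK paper as we understand it. The function name <code>slink</code>, the nested-list distance-matrix input and the example data are assumptions made for illustration, so check the code against the paper before relying on it.

In the output, <code>lam[j]</code> is the height at which point ''j'' stops being the highest-indexed point of its cluster. <code>pi[j]</code> is the highest-indexed point of the cluster that ''j'' then joins. The finite <code>lam</code> values are the single-linkage merge heights.

```python
import math


def slink(dist):
    """Pointer representation (pi, lam) of single-linkage clustering.

    dist is a full symmetric n x n distance matrix given as nested lists.
    Runs in O(n^2) time and keeps only O(n) extra state.
    """
    n = len(dist)
    pi = [0] * n            # pi[j]: highest-indexed point of the cluster j joins
    lam = [math.inf] * n    # lam[j]: height at which that merge happens
    m = [0.0] * n           # scratch: distances from the newly added point
    for i in range(n):
        pi[i] = i
        lam[i] = math.inf
        for j in range(i):
            m[j] = dist[i][j]
        for j in range(i):
            if lam[j] >= m[j]:
                # i is at least as close as j's previous merge,
                # so j now joins i's cluster at height m[j].
                m[pi[j]] = min(m[pi[j]], lam[j])
                lam[j] = m[j]
                pi[j] = i
            else:
                m[pi[j]] = min(m[pi[j]], m[j])
        for j in range(i):
            # If j's target now merges no later than j does, point j at i.
            if lam[j] >= lam[pi[j]]:
                pi[j] = i
    return pi, lam


xs = [0.0, 1.0, 3.0, 7.0]  # hypothetical 1-D data
d = [[abs(a - b) for b in xs] for a in xs]
pi, lam = slink(d)
print(pi, lam)                                  # [1, 2, 3, 3] [1.0, 2.0, 4.0, inf]
print(sorted(h for h in lam if h < math.inf))   # [1.0, 2.0, 4.0]
```

Each new point is processed with a constant number of passes over the earlier points, which is where the quadratic total cost comes from.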

== Cluster dissimilarity ==

In order to decide which clusters should be combined (for agglomerative), or where a cluster should be split (for divisive), a measure of dissimilarity between sets of observations is required. In most methods of hierarchical clustering, this is achieved by use of an appropriate [[metric (mathematics)|metric]] (a measure of [[distance]] between pairs of observations), and a linkage criterion which specifies the dissimilarity of sets as a function of the pairwise distances of observations in the sets.

=== Metric ===

{{See|metric (mathematics)}}

The choice of an appropriate metric will influence the shape of the clusters, as some elements may be close to one another according to one distance and farther away according to another. For example, in a 2-dimensional space, the distance between the point (1,0) and the origin (0,0) is always 1 according to the usual norms, but the distance between the point (1,1) and the origin (0,0) can be 2, <math>\scriptstyle\sqrt{2}</math> or 1 under Manhattan distance, Euclidean distance or maximum distance respectively.
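The arithmetic in this example can be checked with a few lines of Python. <code>norm_distances</code> is a hypothetical helper written for this illustration, not part of any library.

```python
import math


def norm_distances(p, q):
    """Return (Manhattan, Euclidean, maximum) distances between two points."""
    diffs = [abs(a - b) for a, b in zip(p, q)]
    manhattan = sum(diffs)                             # L1 norm
    euclidean = math.sqrt(sum(x * x for x in diffs))   # L2 norm
    maximum = max(diffs)                               # L-infinity norm
    return manhattan, euclidean, maximum


origin = (0.0, 0.0)
print(norm_distances((1.0, 0.0), origin))  # (1.0, 1.0, 1.0)
print(norm_distances((1.0, 1.0), origin))  # (2.0, 1.4142135623730951, 1.0)
```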

Some commonly used metrics for hierarchical clustering are:<ref>{{cite web | title=The DISTANCE Procedure: Proximity Measures | url=http://support.sas.com/documentation/cdl/en/statug/63033/HTML/default/statug_distance_sect016.htm | work=SAS/STAT 9.2 Users Guide | publisher= [[SAS Institute]] | date= | accessdate=2009-04-26}}</ref>

{|class="wikitable"
! Names
! Formula
|-
| [[Euclidean distance]]
| <math> \|a-b \|_2 = \sqrt{\sum_i (a_i-b_i)^2} </math>
|-
| squared Euclidean distance
| <math> \|a-b \|_2^2 = \sum_i (a_i-b_i)^2 </math>
|-
| [[Manhattan distance]]
| <math> \|a-b \|_1 = \sum_i |a_i-b_i| </math>
|-
| [[Uniform norm|maximum distance]]
| <math> \|a-b \|_\infty = \max_i |a_i-b_i| </math>
|-
| [[Mahalanobis distance]]
| <math> \sqrt{(a-b)^{\top}S^{-1}(a-b)} </math> where ''S'' is the [[covariance matrix]]
|-
| [[cosine similarity]]
| <math> \frac{a \cdot b}{\|a\| \|b\|} </math> (a similarity rather than a distance; the dissimilarity <math>1 - \frac{a \cdot b}{\|a\| \|b\|}</math> is typically used for clustering)
|}
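Below is a minimal Python sketch of three rows of the table. It assumes that points are given as equal-length sequences of numbers.

* To avoid implementing a matrix inverse, <code>mahalanobis</code> takes the inverse covariance matrix <math>S^{-1}</math> as a precomputed argument. This is an assumption of the sketch, not part of the definition.
* <code>cosine_dissimilarity</code> returns one minus the cosine similarity and is undefined for zero vectors.
* All function names are illustrative.

```python
import math


def squared_euclidean(a, b):
    """Sum of squared coordinate differences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mahalanobis(a, b, s_inv):
    """sqrt((a-b)^T S^{-1} (a-b)); s_inv is the precomputed inverse covariance."""
    d = [x - y for x, y in zip(a, b)]
    quad = sum(d[i] * s_inv[i][j] * d[j]
               for i in range(len(d)) for j in range(len(d)))
    return math.sqrt(quad)


def cosine_dissimilarity(a, b):
    """1 - cosine similarity: 0 for vectors pointing the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


identity = [[1.0, 0.0], [0.0, 1.0]]
print(mahalanobis((0.0, 0.0), (3.0, 4.0), identity))  # 5.0
print(cosine_dissimilarity((1.0, 0.0), (0.0, 1.0)))   # 1.0
```

With ''S'' equal to the identity matrix, the Mahalanobis distance reduces to the Euclidean distance, as the first call shows.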

For text or other non-numeric data, metrics such as the [[Hamming distance]] or [[Levenshtein distance]] are often used.
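For strings, the two distances mentioned above can be sketched as follows. The helper names are illustrative. The Levenshtein version uses the standard dynamic-programming recurrence and keeps only one row of the table at a time.

```python
def hamming(s, t):
    """Number of positions at which two equal-length strings differ."""
    if len(s) != len(t):
        raise ValueError("Hamming distance needs equal-length strings")
    return sum(c1 != c2 for c1, c2 in zip(s, t))


def levenshtein(s, t):
    """Minimum number of single-character insertions, deletions and substitutions."""
    prev = list(range(len(t) + 1))  # distances from "" to each prefix of t
    for i, cs in enumerate(s, start=1):
        cur = [i]
        for j, ct in enumerate(t, start=1):
            cur.append(min(prev[j] + 1,                # delete cs
                           cur[j - 1] + 1,             # insert ct
                           prev[j - 1] + (cs != ct)))  # substitute or match
        prev = cur
    return prev[-1]


print(hamming("karolin", "kathrin"))     # 3
print(levenshtein("kitten", "sitting"))  # 3
```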

A review of cluster analysis in health psychology research found that the most common distance measure in published studies in that research area is the Euclidean distance or the squared Euclidean distance.{{Citation needed|date=April 2009}}

=== Linkage criteria ===

The linkage criterion determines the distance between sets of observations as a function of the pairwise distances between observations.

Some commonly used linkage criteria between two sets of observations ''A'' and ''B'' are:<ref>{{cite web | title=The CLUSTER Procedure: Clustering Methods | url=http://support.sas.com/documentation/cdl/en/statug/63033/HTML/default/statug_cluster_sect012.htm | work=SAS/STAT 9.2 Users Guide | publisher= [[SAS Institute]] | date= | accessdate=2009-04-26}}</ref><ref>Székely, G. J. and Rizzo, M. L. (2005) Hierarchical clustering via Joint Between-Within Distances: Extending Ward's Minimum Variance Method, Journal of Classification 22, 151-183.</ref>

{|class="wikitable"
! Names
! Formula
|-
| Maximum or [[complete linkage clustering]]
| <math> \max \, \{\, d(a,b) : a \in A,\, b \in B \,\}. </math>
|-
| Minimum or [[single-linkage clustering]]
| <math> \min \, \{\, d(a,b) : a \in A,\, b \in B \,\}. </math>
|-
| Mean or average linkage clustering, or [[UPGMA]]
| <math> \frac{1}{|A| |B|} \sum_{a \in A }\sum_{ b \in B} d(a,b). </math>
|-
| [[Energy distance|Minimum energy clustering]]
| <math> \frac {2}{nm}\sum_{i,j=1}^{n,m} \|a_i- b_j\|_2 - \frac {1}{n^2}\sum_{i,j=1}^{n} \|a_i-a_j\|_2 - \frac{1}{m^2}\sum_{i,j=1}^{m} \|b_i-b_j\|_2 </math>
|}

where ''d'' is the chosen metric, and in the minimum energy criterion ''n'' and ''m'' are the numbers of observations in ''A'' and ''B''. Other linkage criteria include:

* The sum of all intra-cluster variance.
* The increase in variance for the cluster being merged ([[Ward's method|Ward's criterion]]).<ref name="wards method">{{cite journal
|doi=10.2307/2282967
|last=Ward |first=Joe H.
|title=Hierarchical Grouping to Optimize an Objective Function
|journal=Journal of the American Statistical Association
|volume=58 |issue=301 |year=1963 |pages=236–244
|mr=0148188
|jstor=2282967
}}</ref>
* The probability that candidate clusters spawn from the same distribution function (V-linkage).
* The product of in-degree and out-degree on a k-nearest-neighbor graph (graph degree linkage).<ref>Zhang, et al. "Graph degree linkage: Agglomerative clustering on a directed graph." 12th European Conference on Computer Vision, Florence, Italy, October 7-13, 2012. http://arxiv.org/abs/1208.5092</ref>
* The increment of some cluster descriptor (i.e., a quantity defined for measuring the quality of a cluster) after merging two clusters.<ref>Zhang, et al. "Agglomerative clustering via maximum incremental path integral." Pattern Recognition (2013).</ref> <ref>Zhao, and Tang. "Cyclizing clusters via zeta function of a graph." Advances in Neural Information Processing Systems. 2008.</ref> <ref>Ma, et al. "Segmentation of multivariate mixed data via lossy data coding and compression." IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(9) (2007): 1546-1562.</ref>
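The criteria in the table, together with Ward's criterion, can be sketched directly from their definitions for small clusters given as lists of coordinate tuples. The sketch rests on these assumptions:

* The metric ''d'' is the Euclidean distance, via <code>math.dist</code> (Python 3.8 or later).
* Ward's criterion is expressed as the increase in the within-cluster sum of squares caused by the merge, which is how Ward's objective is commonly stated.
* The function names are hypothetical.

Each call costs time proportional to the number of point pairs involved, so this is meant for illustration rather than efficiency.

```python
import math


def single_linkage(A, B, d=math.dist):
    """Minimum pairwise distance between clusters A and B."""
    return min(d(a, b) for a in A for b in B)


def complete_linkage(A, B, d=math.dist):
    """Maximum pairwise distance between clusters A and B."""
    return max(d(a, b) for a in A for b in B)


def average_linkage(A, B, d=math.dist):
    """Mean pairwise distance between clusters A and B (UPGMA)."""
    return sum(d(a, b) for a in A for b in B) / (len(A) * len(B))


def energy_distance(A, B):
    """Minimum energy criterion with Euclidean norms, n = |A|, m = |B|."""
    n, m = len(A), len(B)
    between = sum(math.dist(a, b) for a in A for b in B)
    within_a = sum(math.dist(a1, a2) for a1 in A for a2 in A)
    within_b = sum(math.dist(b1, b2) for b1 in B for b2 in B)
    return 2 * between / (n * m) - within_a / n ** 2 - within_b / m ** 2


def _sse(C):
    """Sum of squared Euclidean deviations from the cluster mean."""
    dim = len(C[0])
    mean = [sum(p[k] for p in C) / len(C) for k in range(dim)]
    return sum((p[k] - mean[k]) ** 2 for p in C for k in range(dim))


def ward_increase(A, B):
    """Increase in within-cluster sum of squares caused by merging A and B."""
    return _sse(list(A) + list(B)) - _sse(A) - _sse(B)


A = [(0.0,)]
B = [(1.0,), (3.0,)]
print(single_linkage(A, B), complete_linkage(A, B), average_linkage(A, B))  # 1.0 3.0 2.0
print(energy_distance(A, B))  # 3.0
print(ward_increase(A, B))    # approximately 8/3 (about 2.667)
```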

== Discussion ==

Hierarchical clustering has the distinct advantage that any valid measure of distance can be used. In fact, the observations themselves are not required: all that is used is a matrix of distances.
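The following naive Python sketch illustrates that only a distance matrix is needed: it clusters items it never inspects directly. It receives a precomputed matrix and a linkage function:

* the builtin <code>min</code> gives single linkage;
* <code>max</code> gives complete linkage;
* <code>lambda ds: sum(ds) / len(ds)</code> gives average linkage.

The function name <code>agglomerate</code> and its return format are assumptions. Scanning all pairs of current clusters at each of the ''n''-1 merge steps gives the <math>\mathcal{O}(n^3)</math> behaviour of the general case.

```python
def agglomerate(dist, linkage=min):
    """Naive agglomerative clustering driven only by a distance matrix.

    dist: full symmetric n x n matrix of pairwise distances (nested lists).
    linkage: reduces the list of cross-cluster pairwise distances to one
             number, e.g. min (single linkage) or max (complete linkage).
    Returns merges as (cluster_1, cluster_2, height) tuples, where each
    cluster is a frozenset of observation indices.
    """
    clusters = [frozenset([i]) for i in range(len(dist))]
    merges = []
    while len(clusters) > 1:
        best = None
        # Examine every pair of current clusters and keep the closest one.
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                h = linkage([dist[i][j] for i in clusters[x] for j in clusters[y]])
                if best is None or h < best[0]:
                    best = (h, x, y)
        h, x, y = best
        merges.append((clusters[x], clusters[y], h))
        merged = clusters[x] | clusters[y]
        clusters = [c for k, c in enumerate(clusters) if k not in (x, y)]
        clusters.append(merged)
    return merges


# Hypothetical non-numeric data: only their Hamming distances are passed on.
words = ["cat", "bat", "bit", "dog"]
dist = [[sum(a != b for a, b in zip(u, v)) for v in words] for u in words]
print([h for _, _, h in agglomerate(dist)])  # [1, 1, 3]
```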

== Example for Agglomerative Clustering == | |||

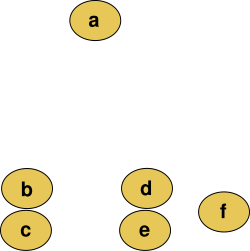

For example, suppose this data is to be clustered, and the [[Euclidean distance]] is the [[Metric (mathematics)|distance metric]]. | |||

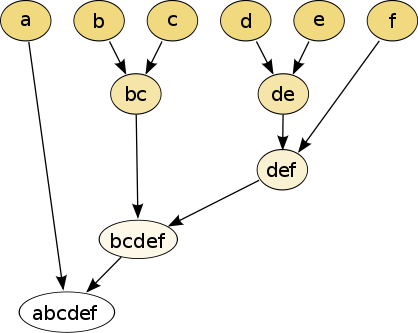

Cutting the tree at a given height will give a partitioning clustering at a selected precision. In this example, cutting after the second row of the dendrogram will yield clusters {a} {b c} {d e} {f}. Cutting after the third row will yield clusters {a} {b c} {d e f}, which is a coarser clustering, with a smaller number of larger clusters. | |||

[[Image:Clusters.svg|frame|none|Raw data]] | |||

The hierarchical clustering [[dendrogram]] would be as such: | |||

[[Image:Hierarchical clustering simple diagram.svg|frame|none|Traditional representation]] | |||
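Cutting the dendrogram can be sketched with a union-find structure over the list of merges. The article gives no coordinates for the six points, so the merge heights below are hypothetical values, one unit per dendrogram row, chosen only to reproduce the tree's shape. <code>cut_at_height</code> is likewise an illustrative helper, and it assumes that merge heights never decrease.

```python
def cut_at_height(labels, merges, height):
    """Flat clusters obtained by cutting a dendrogram at `height`.

    labels: leaf names. merges: (left, right, merge_height) tuples in merge
    order, where left and right are frozensets of leaf names.
    Only merges at or below `height` are applied.
    """
    parent = {x: x for x in labels}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for left, right, h in merges:
        if h <= height:
            parent[find(next(iter(left)))] = find(next(iter(right)))

    groups = {}
    for x in labels:
        groups.setdefault(find(x), []).append(x)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical heights reproducing the dendrogram's rows.
merges = [
    (frozenset("b"), frozenset("c"), 1.0),
    (frozenset("d"), frozenset("e"), 1.0),
    (frozenset("de"), frozenset("f"), 2.0),
    (frozenset("bc"), frozenset("def"), 3.0),
    (frozenset("a"), frozenset("bcdef"), 4.0),
]
print(cut_at_height(list("abcdef"), merges, 1.5))  # [['a'], ['b', 'c'], ['d', 'e'], ['f']]
print(cut_at_height(list("abcdef"), merges, 2.5))  # [['a'], ['b', 'c'], ['d', 'e', 'f']]
```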

This method builds the hierarchy from the individual elements by progressively merging clusters. In our example, we have six elements {a} {b} {c} {d} {e} and {f}. The first step is to determine which elements to merge in a cluster. Usually, we want to take the two closest elements, according to the chosen distance.

Optionally, one can also construct a [[distance matrix]] at this stage, where the number in the ''i''-th row ''j''-th column is the distance between the ''i''-th and ''j''-th elements. Then, as clustering progresses, rows and columns are merged as the clusters are merged and the distances updated. This is a common way to implement this type of clustering, and has the benefit of caching distances between clusters. A simple agglomerative clustering algorithm is described in the [[single-linkage clustering]] page; it can easily be adapted to different types of linkage (see below).
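The row-and-column update can be sketched in Python. The update rules used here are the minimum, the maximum, or the size-weighted mean of the two old distances, for single, complete and average linkage respectively. They are special cases of what is usually called the Lance-Williams recurrence, which this article does not otherwise discuss. The function name and the in-place nested-list representation are assumptions of the sketch.

```python
def lance_williams_update(dist, sizes, i, j, method="single"):
    """Merge clusters i and j in a distance matrix, in place.

    dist: full symmetric matrix (nested lists) of current cluster distances.
    sizes: number of observations in each current cluster.
    The merged cluster keeps the smaller index; the other row/column is removed.
    """
    if method not in ("single", "complete", "average"):
        raise ValueError(f"unknown method: {method!r}")
    if i > j:
        i, j = j, i
    ni, nj = sizes[i], sizes[j]
    for k in range(len(dist)):
        if k in (i, j):
            continue
        dki, dkj = dist[k][i], dist[k][j]
        if method == "single":
            new = min(dki, dkj)
        elif method == "complete":
            new = max(dki, dkj)
        else:  # average linkage (UPGMA): size-weighted mean
            new = (ni * dki + nj * dkj) / (ni + nj)
        dist[k][i] = dist[i][k] = new
    # Drop row and column j; the merged cluster now lives at index i.
    del dist[j]
    for row in dist:
        del row[j]
    sizes[i] = ni + nj
    del sizes[j]


xs = [0.0, 1.0, 3.0, 7.0]  # hypothetical 1-D data
d = [[abs(a - b) for b in xs] for a in xs]
sizes = [1] * len(xs)
lance_williams_update(d, sizes, 0, 1, method="average")  # merge the two closest points
print(d)      # [[0.0, 2.5, 6.5], [2.5, 0.0, 4.0], [6.5, 4.0, 0.0]]
print(sizes)  # [2, 1, 1]
```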

Suppose we have merged the two closest elements, ''b'' and ''c''. We now have the clusters {''a''}, {''b'', ''c''}, {''d''}, {''e''} and {''f''}, and want to merge them further. To do that, we need the distance between {a} and {b c}, and therefore must define the distance between two clusters.

Usually the distance between two clusters <math>\mathcal{A}</math> and <math>\mathcal{B}</math> is one of the following:

* The maximum distance between elements of each cluster (also called [[complete-linkage clustering]]):
::<math> \max \{\, d(x,y) : x \in \mathcal{A},\, y \in \mathcal{B}\,\}. </math>
* The minimum distance between elements of each cluster (also called [[single-linkage clustering]]):
::<math> \min \{\, d(x,y) : x \in \mathcal{A},\, y \in \mathcal{B} \,\}. </math>
* The mean distance between elements of each cluster (also called average linkage clustering, used e.g. in [[UPGMA]]):
::<math> {1 \over {|\mathcal{A}|\cdot|\mathcal{B}|}}\sum_{x \in \mathcal{A}}\sum_{ y \in \mathcal{B}} d(x,y). </math>
* The sum of all intra-cluster variance.
* The increase in variance for the cluster being merged ([[Ward's method]]<ref name="wards method"/>).
* The probability that candidate clusters spawn from the same distribution function (V-linkage).

Each agglomeration occurs at a distance between clusters no smaller than that of the previous agglomeration, and one can decide to stop clustering either when the clusters are too far apart to be merged (distance criterion) or when there is a sufficiently small number of clusters (number criterion).
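The two stopping rules can be sketched as a function over the sequence of merge heights. The name <code>merges_before_stop</code> and the example heights are hypothetical. For single, complete and average linkage the heights never decrease, so the distance criterion can stop at the first merge that exceeds the threshold.

```python
def merges_before_stop(heights, n, max_distance=None, n_clusters=None):
    """Number of merges to perform before stopping.

    heights: merge heights in the order the merges happen (assumed
             non-decreasing). n: number of observations.
    Stops when the next merge would exceed max_distance (distance criterion)
    or once n_clusters clusters remain (number criterion), whichever is first.
    """
    count = 0
    for h in heights:
        if n_clusters is not None and n - count <= n_clusters:
            break
        if max_distance is not None and h > max_distance:
            break
        count += 1
    return count


heights = [1.0, 2.0, 4.0]  # hypothetical heights for 4 observations
print(4 - merges_before_stop(heights, 4, max_distance=3.0))  # 2 clusters remain
print(4 - merges_before_stop(heights, 4, n_clusters=3))      # 3 clusters remain
```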

== Software ==

=== Open Source Frameworks ===

* [http://bonsai.hgc.jp/~mdehoon/software/cluster/ Cluster 3.0] provides a [[Graphical User Interface]] for accessing different clustering routines and is available for Windows, Mac OS X, Linux and Unix.
* [[Environment for DeveLoping KDD-Applications Supported by Index-Structures|ELKI]] includes multiple hierarchical clustering algorithms, various linkage strategies, the efficient SLINK<ref name="SLINK" /> algorithm, flexible cluster extraction from dendrograms and various other [[cluster analysis]] algorithms.
* [[GNU Octave|Octave]], the [[GNU]] analog to [[MATLAB]], implements hierarchical clustering in its [http://octave.sourceforge.net/statistics/function/linkage.html linkage function].
* [[Orange (software)|Orange]], a free data mining software suite, provides the module [http://www.ailab.si/orange/doc/modules/orngClustering.htm orngClustering] for scripting in [[Python (programming language)|Python]], and also supports cluster analysis through visual programming.
* [[R (programming language)|R]] has several functions for hierarchical clustering: see [http://cran.r-project.org/web/views/Cluster.html CRAN Task View: Cluster Analysis & Finite Mixture Models] for more information.
* [[scikit-learn]] implements hierarchical clustering based on the [[Ward's method|Ward algorithm]] only.
* [[Weka (machine learning)|Weka]] includes hierarchical cluster analysis.

=== Standalone implementations ===

* [[CrimeStat]] implements two hierarchical clustering routines: a nearest neighbor routine (Nnh) and a risk-adjusted routine (Rnnh).
* [http://code.google.com/p/figue/ figue] is a [[JavaScript]] package that implements some agglomerative clustering functions (single-linkage, complete-linkage, average-linkage) and functions to visualize clustering output (e.g. dendrograms).
* [http://code.google.com/p/scipy-cluster/ hcluster] is a [[Python (programming language)|Python]] implementation, based on [[NumPy]], which supports hierarchical clustering and plotting.
* [http://www.semanticsearchart.com/researchHAC.html Hierarchical Agglomerative Clustering] is implemented as a C# Visual Studio project that includes processing of real text files and building of a document-term matrix with stop-word filtering and stemming.
* [http://deim.urv.cat/~sgomez/multidendrograms.php MultiDendrograms] is an [[open source]] [[Java (programming language)|Java]] application for variable-group agglomerative hierarchical clustering, with a [[graphical user interface]].
* [http://www.mathworks.com/matlabcentral/fileexchange/38018-graph-agglomerative-clustering-gac-toolbox Graph Agglomerative Clustering (GAC) toolbox] implements several graph-based agglomerative clustering algorithms.

=== Commercial ===

* [[MathWorks|MATLAB]] includes hierarchical cluster analysis.
* [[SAS System|SAS]] includes hierarchical cluster analysis.
* [[Mathematica]] includes a Hierarchical Clustering Package.

== See also ==

* [[Statistical distance]]
* [[Cluster analysis]]
* [[CURE data clustering algorithm]]
* [[Dendrogram]]
* [[Determining the number of clusters in a data set]]
* [[Hierarchical clustering of networks]]
* [[Nearest-neighbor chain algorithm]]
* [[Numerical taxonomy]]
* [[OPTICS algorithm]]
* [[Nearest neighbor search]]
* [[Locality-sensitive hashing]]

== Notes ==

{{reflist}}

== References and further reading ==

*{{cite book
|last1=Kaufman|first1=L.|last2=Rousseeuw|first2=P.J.
|year=1990
|title=Finding Groups in Data: An Introduction to Cluster Analysis |edition=1
|isbn= 0-471-87876-6
|publisher=John Wiley |location= New York
}}
*{{cite book
|last1=Hastie|first1=Trevor|authorlink1=Trevor Hastie|last2=Tibshirani|first2=Robert|authorlink2=Robert Tibshirani|last3=Friedman|first3=Jerome
|year=2009
|title=The Elements of Statistical Learning |edition=2nd
|isbn=0-387-84857-6
|url=http://www-stat.stanford.edu/~tibs/ElemStatLearn/ |format=PDF |accessdate=2009-10-20
|publisher=Springer |location=New York
|chapter=14.3.12 Hierarchical clustering |pages=520–528
}}
*{{Cite book | last1=Press | first1=WH | last2=Teukolsky | first2=SA | last3=Vetterling | first3=WT | last4=Flannery | first4=BP | year=2007 | title=Numerical Recipes: The Art of Scientific Computing | edition=3rd | publisher=Cambridge University Press | publication-place=New York | isbn=978-0-521-88068-8 | chapter=Section 16.4. Hierarchical Clustering by Phylogenetic Trees | chapter-url=http://apps.nrbook.com/empanel/index.html#pg=868}}

{{DEFAULTSORT:Hierarchical Clustering}}

[[Category:Network analysis]]

[[Category:Data clustering algorithms]]

Revision as of 16:01, 12 December 2013


A vendor's stamp duty has been launched on industrial property for the primary time, at rates ranging from 5 per cent to 15 per cent. The Authorities might be trying to reassure the market that they aren't in opposition to foreigners and PRs investing in Singapore's property market. They imposed these measures because of extenuating components available in the market." The sale of new dual-key EC models will even be restricted to multi-generational households only. The models have two separate entrances, permitting grandparents, for example, to dwell separately. The vendor's stamp obligation takes effect right this moment and applies to industrial property and plots which might be offered inside three years of the date of buy. JLL named Best Performing Property Brand for second year running

- ↑ Template:Cite web

- ↑ Template:Cite web

- ↑ Székely, G. J. and Rizzo, M. L. "Hierarchical clustering via joint between-within distances: Extending Ward's minimum variance method." Journal of Classification 22 (2005): 151-183.

- ↑ Zhang, et al. "Graph degree linkage: Agglomerative clustering on a directed graph." 12th European Conference on Computer Vision, Florence, Italy, October 7-13, 2012. http://arxiv.org/abs/1208.5092

- ↑ Zhang, et al. "Agglomerative clustering via maximum incremental path integral." Pattern Recognition (2013).

- ↑ Zhao and Tang. "Cyclizing clusters via zeta function of a graph." Advances in Neural Information Processing Systems (2008).

- ↑ Ma, et al. "Segmentation of multivariate mixed data via lossy data coding and compression." IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(9) (2007): 1546-1562.