Bulk modulus: Difference between revisions


en>Hmains m copyedit, MOS value rules and AWB general fixes using AWB

||

| Line 1: | Line 1: | ||

In [[mathematics]], '''approximation theory''' is concerned with how [[function (mathematics)|function]]s can best be [[approximation|approximated]] with simpler [[function (mathematics)|functions]], and with [[Quantitative property|quantitative]]ly [[characterization (mathematics)|characterizing]] the [[approximation error|errors]] introduced thereby. Note that what is meant by ''best'' and ''simpler'' will depend on the application. | |||

A closely related topic is the approximation of functions by [[generalized Fourier series]], that is, approximations based upon summation of a series of terms based upon [[orthogonal polynomials]]. | |||

One problem of particular interest is that of approximating a function in a [[computer]] mathematical library, using operations that can be performed on the computer or calculator (e.g. addition and multiplication), such that the result is as close to the actual function as possible. This is typically done with [[polynomial]] or [[Rational function|rational]] (ratio of polynomials) approximations. | |||

The objective is to make the approximation as close as possible to the actual function, typically with an accuracy close to that of the underlying computer's [[floating point]] arithmetic. This is accomplished by using a polynomial of high [[Degree of a polynomial|degree]], and/or narrowing the domain over which the polynomial has to approximate the function. | |||

Narrowing the domain can often be done through the use of various addition or scaling formulas for the function being approximated. Modern mathematical libraries often reduce the domain into many tiny segments and use a low-degree polynomial for each segment. | |||

{|style="float:right" | |||

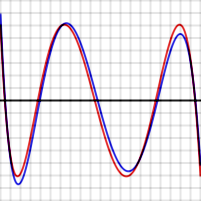

| [[Image:Logerror.png|thumb|300px|Error between optimal polynomial and log(x) (red), and Chebyshev approximation and log(x) (blue) over the interval [2, 4]. Vertical divisions are 10<sup>−5</sup>. Maximum error for the optimal polynomial is 6.07 x 10<sup>−5</sup>.]] | |||

| [[Image:Experror.png|thumb|300px|Error between optimal polynomial and exp(x) (red), and Chebyshev approximation and exp(x) (blue) over the interval [−1, 1]. Vertical divisions are 10<sup>−4</sup>. Maximum error for the optimal polynomial is 5.47 x 10<sup>−4</sup>.]] | |||

|} | |||

==Optimal polynomials== | |||

Once the domain and degree of the polynomial are chosen, the polynomial itself is chosen in such a way as to minimize the worst-case error. That is, the goal is to minimize the maximum value of <math>\mid P(x)-f(x)\mid</math>, where ''P''(''x'') is the approximating polynomial and ''f''(''x'') is the actual function. For well-behaved functions, there exists an ''N''<span style="padding-left:0.1em;">th</span>-degree polynomial that will lead to an error curve that oscillates back and forth between <math>+\varepsilon</math> and <math>-\varepsilon</math> a total of ''N''+2 times, giving a worst-case error of <math>\varepsilon</math>. It is seen that an ''N''<span style="padding-left:0.1em;">th</span>-degree polynomial can interpolate ''N''+1 points in a curve. Such a polynomial is always optimal. It is possible to make contrived functions ''f''(''x'') for which no such polynomial exists, but these occur rarely in practice. | |||

For example the graphs shown to the right show the error in approximating log(x) and exp(x) for ''N'' = 4. The red curves, for the optimal polynomial, are '''level''', that is, they oscillate between <math>+\varepsilon</math> and <math>-\varepsilon</math> exactly. Note that, in each case, the number of extrema is ''N''+2, that is, 6. Two of the extrema are at the end points of the interval, at the left and right edges of the graphs. | |||

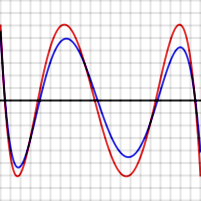

[[Image:Impossibleerror.png|thumb|right|300px|Error ''P''(''x'') − ''f''(''x'') for level polynomial (red), and for purported better polynomial (blue)]] To prove this is true in general, suppose ''P'' is a polynomial of degree ''N'' having the property described, that is, it gives rise to an error function that has ''N'' + 2 extrema, of alternating signs and equal magnitudes. The red graph to the right shows what this error function might look like for ''N'' = 4. Suppose ''Q''(''x'') (whose error function is shown in blue to the right) is another ''N''-degree polynomial that is a better approximation to ''f'' than ''P''. In particular, ''Q'' is closer to ''f'' than ''P'' for each value ''x<sub>i</sub>'' where an extreme of ''P''−''f'' occurs, so | |||

:<math>|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|.</math> | |||

When a maximum of ''P''−''f'' occurs at ''x<sub>i</sub>'', then | |||

:<math>Q(x_i)-f(x_i)\le|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|=P(x_i)-f(x_i),</math> | |||

And when a minimum of ''P''−''f'' occurs at ''x<sub>i</sub>'', then | |||

:<math>f(x_i)-Q(x_i)\le|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|=f(x_i)-P(x_i).</math> | |||

So, as can be seen in the graph, [''P''(''x'') − ''f''(''x'')] − [''Q''(''x'') − ''f''(''x'')] must alternate in sign for the ''N'' + 2 values of ''x<sub>i</sub>''. But [''P''(''x'') − ''f''(''x'')] − [''Q''(''x'') − ''f''(''x'')] reduces to ''P''(''x'') − ''Q''(''x'') which is a polynomial of degree ''N''. This function changes sign at least ''N''+1 times so, by the [[Intermediate value theorem]], it has ''N''+1 zeroes, which is impossible for a polynomial of degree ''N''. | |||

==Chebyshev approximation== | |||

One can obtain polynomials very close to the optimal one by expanding the given function in terms of [[Chebyshev polynomials]] and then cutting off the expansion at the desired degree. | |||

This is similar to the [[Harmonic analysis|Fourier analysis]] of the function, using the Chebyshev polynomials instead of the usual trigonometric functions. | |||

If one calculates the coefficients in the Chebyshev expansion for a function: | |||

:<math>f(x) \sim \sum_{i=0}^\infty c_i T_i(x)</math> | |||

and then cuts off the series after the <math>T_N</math> term, one gets an ''N''<span style="padding-left:0.1em;">th</span>-degree polynomial approximating ''f''(''x''). | |||

The reason this polynomial is nearly optimal is that, for functions with rapidly converging power series, if the series is cut off after some term, the total error arising from the cutoff is close to the first term after the cutoff. That is, the first term after the cutoff dominates all later terms. The same is true if the expansion is in terms of Chebyshev polynomials. If a Chebyshev expansion is cut off after <math>T_N</math>, the error will take a form close to a multiple of <math>T_{N+1}</math>. The Chebyshev polynomials have the property that they are level – they oscillate between +1 and −1 in the interval [−1, 1]. <math>T_{N+1}</math> has ''N''+2 level extrema. This means that the error between ''f''(''x'') and its Chebyshev expansion out to <math>T_N</math> is close to a level function with ''N''+2 extrema, so it is close to the optimal ''N''<span style="padding-left:0.1em;">th</span>-degree polynomial. | |||

In the graphs above, note that the blue error function is sometimes better than (inside of) the red function, but sometimes worse, meaning that it is not quite the optimal polynomial. Note also that the discrepancy is less serious for the exp function, which has an extremely rapidly converging power series, than for the log function. | |||

Chebyshev approximation is the basis for [[Clenshaw–Curtis quadrature]], a [[numerical integration]] technique. | |||

==Remez' algorithm== | |||

The [[Remez algorithm]] (sometimes spelled Remes) is used to produce an optimal polynomial ''P''(''x'') approximating a given function ''f''(''x'') over a given interval. It is an iterative algorithm that converges to a polynomial that has an error function with ''N''+2 level extrema. By the theorem above, that polynomial is optimal. | |||

Remez' algorithm uses the fact that one can construct an ''N''<span style="padding-left:0.1em;">th</span>-degree polynomial that leads to level and alternating error values, given ''N''+2 test points. | |||

Given ''N''+2 test points <math>x_1</math>, <math>x_2</math>, ... <math>x_{N+2}</math> (where <math>x_1</math> and <math>x_{N+2}</math> are presumably the end points of the interval of approximation), these equations need to be solved: | |||

:<math>P(x_1) - f(x_1) = + \varepsilon\,</math> | |||

:<math>P(x_2) - f(x_2) = - \varepsilon\,</math> | |||

:<math>P(x_3) - f(x_3) = + \varepsilon\,</math> | |||

:<math>\vdots</math> | |||

:<math>P(x_{N+2}) - f(x_{N+2}) = \pm \varepsilon.\,</math> | |||

The right-hand sides alternate in sign. | |||

That is, | |||

:<math>P_0 + P_1 x_1 + P_2 x_1^2 + P_3 x_1^3 + \dots + P_N x_1^N - f(x_1) = + \varepsilon\,</math> | |||

:<math>P_0 + P_1 x_2 + P_2 x_2^2 + P_3 x_2^3 + \dots + P_N x_2^N - f(x_2) = - \varepsilon\,</math> | |||

:<math>\vdots</math> | |||

Since <math>x_1</math>, ..., <math>x_{N+2}</math> were given, all of their powers are known, and <math>f(x_1)</math>, ..., <math>f(x_{N+2})</math> are also known. That means that the above equations are just ''N''+2 linear equations in the ''N''+2 variables <math>P_0</math>, <math>P_1</math>, ..., <math>P_N</math>, and <math>\varepsilon</math>. Given the test points <math>x_1</math>, ..., <math>x_{N+2}</math>, one can solve this system to get the polynomial ''P'' and the number <math>\varepsilon</math>. | |||

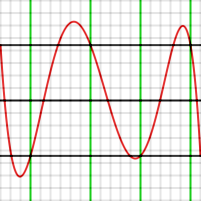

The graph below shows an example of this, producing a fourth-degree polynomial approximating <math>e^x</math> over [−1, 1]. The test points were set at | |||

−1, −0.7, −0.1, +0.4, +0.9, and 1. Those values are shown in green. The resultant value of <math>\varepsilon</math> is 4.43 x 10<sup>−4</sup> | |||

[[Image:Remesdemo.png|thumb|center|300px|Error of the polynomial produced by the first step of Remez' algorithm, approximating e<sup>x</sup> over the interval [−1, 1]. Vertical divisions are 10<sup>−4</sup>.]] | |||

Note that the error graph does indeed take on the values <math>\pm \varepsilon</math> at the six test points, including the end points, but that those points are not extrema. If the four interior test points had been extrema (that is, the function ''P''(''x'')''f''(''x'') had maxima or minima there), the polynomial would be optimal. | |||

The second step of Remez' algorithm consists of moving the test points to the approximate locations where the error function had its actual local maxima or minima. For example, one can tell from looking at the graph that the point at −0.1 should have been at about −0.28. | |||

The way to do this in the algorithm is to use a single round of | |||

[[Newton's method]]. Since one knows the first and second derivatives of ''P''(''x'')−''f''(''x''), one can calculate approximately how far a test point has to be moved so that the derivative will be zero. | |||

:Calculating the derivatives of a polynomial is straightforward. One must also be able to calculate the first and second derivatives of ''f''(''x''). Remez' algorithm requires an ability to calculate <math>f(x)\,</math>, <math>f'(x)\,</math>, and <math>f''(x)\,</math> to extremely high precision. The entire algorithm must be carried out to higher precision than the desired precision of the result. | |||

After moving the test points, the linear equation part is repeated, getting a new polynomial, and Newton's method is used again to move the test points again. This sequence is continued until the result converges to the desired accuracy. The algorithm converges very rapidly. | |||

Convergence is quadratic for well-behaved functions—if the test points are within <math>10^{-15}</math> of the correct result, they will be approximately within <math>10^{-30}</math> of the correct result after the next round. | |||

Remez' algorithm is typically started by choosing the extrema of the Chebyshev polynomial <math>T_{N+1}</math> as the initial points, since the final error function will be similar to that polynomial. | |||

==Main journals== | |||

* [[Journal of Approximation Theory]] | |||

* [[Constructive Approximation]] | |||

* [[East Journal on Approximations]] | |||

==See also== | |||

*[[Chebyshev polynomials]] | |||

*[[Generalized Fourier series]] | |||

*[[Orthogonal polynomials]] | |||

*[[Orthonormal basis]] | |||

*[[Fourier series]] | |||

*[[Schauder basis]] | |||

*[[Padé approximant]] | |||

==References== | |||

* N. I. Achiezer (Akhiezer), Theory of approximation, Translated by Charles J. Hyman Frederick Ungar Publishing Co., New York 1956 x+307 pp. | |||

* A. F. Timan, ''Theory of approximation of functions of a real variable'', 1963 ISBN 0-486-67830-X | |||

* C. Hastings, Jr. ''Approximations for Digital Computers''. Princeton University Press, 1955. | |||

* J. F. Hart, E. W. Cheney, C. L. Lawson, H. J. Maehly, C. K. Mesztenyi, [[John R. Rice (professor)|J. R. Rice]], H. C. Thacher Jr., C. Witzgall, ''Computer Approximations''. Wiley, 1968, Lib. Cong. 67-23326. | |||

* L. Fox and I. B. Parker. "Chebyshev Polynomials in Numerical Analysis." Oxford University Press London, 1968. | |||

* {{Citation | last1=Press | first1=WH | last2=Teukolsky | first2=SA | last3=Vetterling | first3=WT | last4=Flannery | first4=BP | year=2007 | title=Numerical Recipes: The Art of Scientific Computing | edition=3rd | publisher=Cambridge University Press | publication-place=New York | isbn=978-0-521-88068-8 | chapter=Section 5.8. Chebyshev Approximation | chapter-url=http://apps.nrbook.com/empanel/index.html?pg=233}} | |||

* W. J. Cody Jr., W. Waite, ''Software Manual for the Elementary Functions''. Prentice-Hall, 1980, ISBN 0-13-822064-6. | |||

* E. Remes [Remez], "Sur le calcul effectif des polynomes d'approximation de Tschebyscheff". 1934 ''C. R. Acad. Sci.'', Paris, '''199''', 337-340. | |||

* K.-G. Steffens, "The History of Approximation Theory: From Euler to Bernstein," Birkhauser, Boston 2006 ISBN 0-8176-4353-2. | |||

* [[Tamas Erdelyi (mathematician)|T. Erdélyi]], "Extensions of the Bloch-Pólya theorem on the number of distinct real zeros of polynomials", ''Journal de théorie des nombres de Bordeaux'' '''20''' (2008), 281–287. | |||

* T. Erdélyi, "The Remez inequality for linear combinations of shifted Gaussians", ''Math. Proc. Cambridge Phil. Soc.'' '''146''' (2009), 523–530. | |||

* L. N. Trefethen, "Approximation theory and approximation practice", SIAM 2013. [http://www2.maths.ox.ac.uk/chebfun/ATAP/] | |||

==External links== | |||

*[http://www.math.technion.ac.il/hat/ History of Approximation Theory (HAT)] | |||

*[http://www.emis.de/journals/SAT/ Surveys in Approximation Theory (SAT)] | |||

[[Category:Approximation theory|*]] | |||

[[Category:Numerical analysis]] | |||

Revision as of 05:34, 3 February 2014

In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. Note that what is meant by best and simpler will depend on the application.

A closely related topic is the approximation of functions by generalized Fourier series, that is, approximations formed by summing a series of terms built from orthogonal polynomials.

One problem of particular interest is that of approximating a function in a computer mathematical library, using operations that can be performed on the computer or calculator (e.g. addition and multiplication), such that the result is as close to the actual function as possible. This is typically done with polynomial or rational (ratio of polynomials) approximations.

The objective is to make the approximation as close as possible to the actual function, typically with an accuracy close to that of the underlying computer's floating-point arithmetic. This is accomplished by using a polynomial of high degree, by narrowing the domain over which the polynomial has to approximate the function, or both.

Narrowing the domain can often be done through the use of various addition or scaling formulas for the function being approximated. Modern mathematical libraries often divide the domain into many small segments and use a low-degree polynomial for each segment.
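To illustrate narrowing the domain with an addition formula, here is a minimal Python sketch that assumes the target function is $e^x$. It uses the identity $e^x = 2^k e^r$ with $r = x - k\ln 2$ and $k$ the nearest integer to $x/\ln 2$, so the core polynomial only has to cover $|r| \le \tfrac12 \ln 2$. The names `exp_core` and `exp_reduced` are hypothetical, and the degree-12 Taylor polynomial is only a stand-in: a real library would fit an optimal polynomial on the reduced interval instead.

```python
import math

LN2 = math.log(2.0)

# Hypothetical core polynomial: the degree-12 Taylor polynomial of exp(r) about 0.
# It stands in for a properly fitted optimal polynomial on |r| <= ln(2)/2.
CORE_COEFFS = [1.0 / math.factorial(j) for j in range(13)]

def exp_core(r):
    """Evaluate the core polynomial with Horner's rule (only additions and multiplications)."""
    acc = 0.0
    for c in reversed(CORE_COEFFS):
        acc = acc * r + c
    return acc

def exp_reduced(x):
    """exp(x) computed as 2**k * exp(r), where r = x - k*ln(2) and |r| <= ln(2)/2 up to rounding."""
    k = round(x / LN2)        # nearest integer to x / ln 2
    r = x - k * LN2           # reduced argument, roughly in [-0.347, 0.347]
    return math.ldexp(exp_core(r), k)  # exact scaling by 2**k

for x in (-10.0, -1.0, 0.5, 3.0, 20.0):
    print(f"{x:6.1f}  {exp_reduced(x):.15e}  {math.exp(x):.15e}")
```

Because `math.ldexp` scales by an exact power of two, the scaling step adds no error; what remains comes from the core polynomial and from rounding in $r$ and in the Horner evaluation.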

Optimal polynomials

Once the domain and degree of the polynomial are chosen, the polynomial itself is chosen in such a way as to minimize the worst-case error. That is, the goal is to minimize the maximum value of $|P(x)-f(x)|$, where P(x) is the approximating polynomial and f(x) is the actual function. For well-behaved functions, there exists an Nth-degree polynomial whose error curve oscillates back and forth between $+\varepsilon$ and $-\varepsilon$ a total of N+2 times, giving a worst-case error of $\varepsilon$. An Nth-degree polynomial has N+1 free coefficients, so it can interpolate N+1 points of a curve. A polynomial whose error oscillates in this way is always optimal, as proved below. It is possible to make contrived functions f(x) for which no such polynomial exists, but these occur rarely in practice.
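The worst-case error can be estimated numerically. The sketch below (hypothetical helper names; sampling on a uniform grid is an assumption and can miss a narrow peak) evaluates a polynomial with Horner's rule and returns the largest sampled value of $|P(x) - f(x)|$ together with where it occurs. As a test case it uses the degree-4 Taylor polynomial of $e^x$ about 0 on [−1, 1].

```python
import math

def horner(coeffs, x):
    """Evaluate P(x) = coeffs[0] + coeffs[1]*x + ... + coeffs[N]*x**N."""
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc

def worst_case_error(coeffs, f, a, b, samples=10001):
    """Largest sampled |P(x) - f(x)| on [a, b], with the x where it occurs."""
    best_err, best_x = -1.0, a
    for i in range(samples):
        x = a + (b - a) * i / (samples - 1)
        err = abs(horner(coeffs, x) - f(x))
        if err > best_err:
            best_err, best_x = err, x
    return best_err, best_x

# Degree-4 Taylor polynomial of exp about 0, lowest degree first.
taylor4 = [1.0, 1.0, 1.0 / 2, 1.0 / 6, 1.0 / 24]
err, where = worst_case_error(taylor4, math.exp, -1.0, 1.0)
print(f"max |P - f| = {err:.4e} at x = {where}")  # largest at the end point x = 1.0
```

For this Taylor polynomial the error $P(x) - f(x)$ is monotone on [−1, 1], so its only extrema are the two end points rather than N + 2 = 6; by the criterion above it is not the optimal degree-4 polynomial.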

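[Figure: Error between the optimal polynomial and log(x) (red), and between the Chebyshev approximation and log(x) (blue), over the interval [2, 4]. Vertical divisions are $10^{-5}$. Maximum error for the optimal polynomial is $6.07 \times 10^{-5}$.]

[Figure: Error between the optimal polynomial and exp(x) (red), and between the Chebyshev approximation and exp(x) (blue), over the interval [−1, 1]. Vertical divisions are $10^{-4}$. Maximum error for the optimal polynomial is $5.47 \times 10^{-4}$.]

For example, the graphs above show the error in approximating log(x) and exp(x) for N = 4. The red curves, for the optimal polynomial, are level, that is, they oscillate between $+\varepsilon$ and $-\varepsilon$ exactly. In each case the number of extrema is N+2, that is, 6. Two of the extrema are at the end points of the interval, at the left and right edges of the graphs.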

[Figure: Error $P(x) - f(x)$ for the level polynomial (red), and for a purported better polynomial (blue).]

To prove this is true in general, suppose P is a polynomial of degree N having the property described, that is, it gives rise to an error function that has N + 2 extrema, of alternating signs and equal magnitudes. The red curve in the figure above shows what this error function might look like for N = 4. Suppose Q(x) (whose error function is shown in blue) is another polynomial of degree N that is a better approximation to f than P. In particular, Q is closer to f than P at each point $x_i$ where an extremum of P − f occurs, so

$$|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|.$$

When a maximum of P − f occurs at $x_i$, then

$$Q(x_i)-f(x_i)\le|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|=P(x_i)-f(x_i),$$

and when a minimum of P − f occurs at $x_i$, then

$$f(x_i)-Q(x_i)\le|Q(x_i)-f(x_i)|<|P(x_i)-f(x_i)|=f(x_i)-P(x_i).$$

So, as can be seen in the graph, [P(x) − f(x)] − [Q(x) − f(x)] must alternate in sign for the N + 2 values of $x_i$: it is positive at each maximum of P − f and negative at each minimum. But [P(x) − f(x)] − [Q(x) − f(x)] reduces to P(x) − Q(x), which is a polynomial of degree at most N. This function changes sign at least N + 1 times, so by the intermediate value theorem it has at least N + 1 zeros, which is impossible for a polynomial of degree at most N that is not identically zero.

Chebyshev approximation

One can obtain polynomials very close to the optimal one by expanding the given function in terms of Chebyshev polynomials and then cutting off the expansion at the desired degree. This is similar to the Fourier analysis of the function, using the Chebyshev polynomials instead of the usual trigonometric functions.

If one calculates the coefficients in the Chebyshev expansion for a function,

$$f(x) \sim \sum_{i=0}^\infty c_i T_i(x),$$

and then cuts off the series after the $T_N$ term, one gets an Nth-degree polynomial approximating f(x).
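A minimal sketch of the truncated Chebyshev expansion, assuming the interval is [−1, 1], $f = e^x$ and N = 4. The coefficients are approximated by the standard discrete cosine sum over m Chebyshev nodes; m = 32 is an arbitrary choice, large enough that the first few coefficients are accurate to near machine precision, and this quadrature is one common way to compute them rather than the only one. The function names are hypothetical, and the returned list holds the $c_i$ of the series above directly (the usual halving of the constant term is already applied).

```python
import math

def chebyshev_coefficients(f, m=32):
    """Approximate c_0, ..., c_{m-1} in f(x) ~ sum_i c_i T_i(x) on [-1, 1].

    Uses the discrete orthogonality of cos(j*theta) at the m Chebyshev nodes
    x_k = cos(theta_k), theta_k = pi*(k + 1/2)/m. The cosine sum yields twice
    the constant term, so c_0 is halved before returning.
    """
    thetas = [math.pi * (k + 0.5) / m for k in range(m)]
    values = [f(math.cos(t)) for t in thetas]
    coeffs = [2.0 / m * sum(v * math.cos(j * t) for v, t in zip(values, thetas))
              for j in range(m)]
    coeffs[0] /= 2.0
    return coeffs

def chebyshev_eval(coeffs, x):
    """Evaluate sum_i c_i T_i(x) using T_i(cos theta) = cos(i*theta), |x| <= 1."""
    theta = math.acos(max(-1.0, min(1.0, x)))
    return sum(c * math.cos(i * theta) for i, c in enumerate(coeffs))

N = 4
c = chebyshev_coefficients(math.exp)
truncated = c[: N + 1]  # keep c_0 ... c_N, i.e. cut off after the T_N term
grid = [-1.0 + 2.0 * i / 2000 for i in range(2001)]
max_err = max(abs(chebyshev_eval(truncated, x) - math.exp(x)) for x in grid)
print(f"c_{N + 1} = {c[N + 1]:.3e}, max truncation error = {max_err:.3e}")
```

For $e^x$ the printed $c_5$ is about $5.43 \times 10^{-4}$ and the maximum truncation error is about $5.91 \times 10^{-4}$, attained at x = 1: the first omitted term accounts for most of the error, as the next paragraph argues.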

The reason this polynomial is nearly optimal is that, for functions with rapidly converging power series, if the series is cut off after some term, the total error arising from the cutoff is close to the first term after the cutoff. That is, the first term after the cutoff dominates all later terms. The same is true if the expansion is in terms of Chebyshev polynomials. If a Chebyshev expansion is cut off after $T_N$, the error will take a form close to a multiple of $T_{N+1}$. The Chebyshev polynomials have the property that they are level: they oscillate between +1 and −1 in the interval [−1, 1], and $T_{N+1}$ has N+2 level extrema. This means that the error between f(x) and its Chebyshev expansion out to $T_N$ is close to a level function with N+2 extrema, so the truncated expansion is close to the optimal Nth-degree polynomial.
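The level property of $T_{N+1}$ can be checked directly. Since $T_n(\cos\theta) = \cos n\theta$, the N + 2 points $x_k = \cos(k\pi/(N+1))$, $k = 0, \ldots, N+1$, give $T_{N+1}(x_k) = (-1)^k$. The sketch below (hypothetical function name) evaluates $T_{N+1}$ with the three-term recurrence $T_{k+1}(x) = 2xT_k(x) - T_{k-1}(x)$ rather than the cosine formula, so the check is not circular.

```python
import math

def chebyshev_T(n, x):
    """Evaluate T_n(x) with the recurrence T_{k+1} = 2x T_k - T_{k-1}, T_0 = 1, T_1 = x."""
    if n == 0:
        return 1.0
    t_prev, t = 1.0, x
    for _ in range(n - 1):
        t_prev, t = t, 2.0 * x * t - t_prev
    return t

N = 4
# Extrema of T_{N+1} on [-1, 1]: x_k = cos(k*pi/(N+1)), k = 0, ..., N+1 (N+2 points).
extrema = [math.cos(k * math.pi / (N + 1)) for k in range(N + 2)]
values = [chebyshev_T(N + 1, x) for x in extrema]
print([round(v, 12) for v in values])  # [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
```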

In the graphs above, note that the blue error function is sometimes better than (inside of) the red function, but sometimes worse, meaning that it is not quite the optimal polynomial. Note also that the discrepancy is less serious for the exp function, which has an extremely rapidly converging power series, than for the log function.

Chebyshev approximation is the basis for Clenshaw–Curtis quadrature, a numerical integration technique.

Remez' algorithm

The Remez algorithm (sometimes spelled Remes) is used to produce an optimal polynomial P(x) approximating a given function f(x) over a given interval. It is an iterative algorithm that converges to a polynomial that has an error function with N+2 level extrema. By the theorem above, that polynomial is optimal.

Remez' algorithm uses the fact that one can construct an Nth-degree polynomial that leads to level and alternating error values, given N+2 test points.

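Given N+2 test points $x_1, x_2, \ldots, x_{N+2}$ (where $x_1$ and $x_{N+2}$ are presumably the end points of the interval of approximation), these equations need to be solved:

$$\begin{aligned}
P(x_1) - f(x_1) &= +\varepsilon \\
P(x_2) - f(x_2) &= -\varepsilon \\
P(x_3) - f(x_3) &= +\varepsilon \\
&\;\;\vdots \\
P(x_{N+2}) - f(x_{N+2}) &= \pm\varepsilon.
\end{aligned}$$

The right-hand sides alternate in sign.

That is,

$$\begin{aligned}
P_0 + P_1 x_1 + P_2 x_1^2 + P_3 x_1^3 + \dots + P_N x_1^N - f(x_1) &= +\varepsilon \\
P_0 + P_1 x_2 + P_2 x_2^2 + P_3 x_2^3 + \dots + P_N x_2^N - f(x_2) &= -\varepsilon \\
&\;\;\vdots
\end{aligned}$$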

Since $x_1, \ldots, x_{N+2}$ were given, all of their powers are known, and $f(x_1), \ldots, f(x_{N+2})$ are also known. That means that the above equations are just N+2 linear equations in the N+2 variables $P_0, P_1, \ldots, P_N$ and $\varepsilon$. Given the test points $x_1, \ldots, x_{N+2}$, one can solve this system to get the polynomial P and the number $\varepsilon$.

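The graph below shows an example of this, producing a fourth-degree polynomial approximating $e^x$ over [−1, 1]. The test points were set at −1, −0.7, −0.1, +0.4, +0.9, and 1; those values are shown in green. The resulting value of $\varepsilon$ is $4.43 \times 10^{-4}$.

[Figure: Error of the polynomial produced by the first step of Remez' algorithm, approximating $e^x$ over the interval [−1, 1]. Vertical divisions are $10^{-4}$.]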

Note that the error graph does indeed take on the values $\pm\varepsilon$ at the six test points, including the end points, but that those points are not extrema. If the four interior test points had been extrema (that is, if the function P(x) − f(x) had maxima or minima there), the polynomial would be optimal.
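The first step of Remez' algorithm, solving the N + 2 linear equations for $P_0, \ldots, P_N$ and $\varepsilon$, can be sketched in plain Python as below. It assumes the monomial basis used in the equations above and a small hand-written Gaussian elimination, so no third-party libraries are needed; the helper names are hypothetical. Run on the example's test points for $e^x$, it reproduces the quoted value of $\varepsilon$.

```python
import math

def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def level_fit(f, xs):
    """Given N+2 test points, solve P(x_i) - f(x_i) = +eps, -eps, +eps, ...

    Unknowns are P_0..P_N (monomial coefficients, lowest degree first) and eps.
    Row i reads P_0 + P_1 x_i + ... + P_N x_i^N - s_i * eps = f(x_i), s_i = +1, -1, ...
    """
    n = len(xs) - 2
    rows = [[x ** j for j in range(n + 1)] + [-1.0 if i % 2 == 0 else 1.0]
            for i, x in enumerate(xs)]
    sol = solve(rows, [f(x) for x in xs])
    return sol[:-1], sol[-1]

def horner(coeffs, x):
    """Evaluate coeffs[0] + coeffs[1]*x + ... with Horner's rule."""
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc

points = [-1.0, -0.7, -0.1, 0.4, 0.9, 1.0]  # test points from the example above
coeffs, eps = level_fit(math.exp, points)
print(f"eps = {eps:.2e}")  # eps = 4.43e-04
for x in points:
    print(f"x = {x:+.1f}: P(x) - exp(x) = {horner(coeffs, x) - math.exp(x):+.3e}")
```

The monomial (Vandermonde) system is fine at degree 4 on [−1, 1] but becomes ill-conditioned as the degree grows; writing P in a Chebyshev basis is a common remedy.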

The second step of Remez' algorithm consists of moving the test points to the approximate locations where the error function had its actual local maxima or minima. For example, one can tell from looking at the graph that the point at −0.1 should have been at about −0.28. The way to do this in the algorithm is to use a single round of Newton's method. Since one knows the first and second derivatives of $e(x) = P(x) - f(x)$, one can calculate approximately how far a test point has to be moved so that the derivative will be zero: each interior test point $x$ is replaced by $x - e'(x)/e''(x)$.
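The Newton step can be sketched as follows, assuming P is stored as monomial coefficients (lowest degree first) and that the caller supplies $f'$ and $f''$ as functions. Each interior point moves to $x - e'(x)/e''(x)$ with $e = P - f$; the end points stay fixed, as in the example. The helper names are hypothetical, and there is no safeguard against a point leaving the interval or against $e''$ vanishing.

```python
def horner(coeffs, x):
    """Evaluate coeffs[0] + coeffs[1]*x + ... with Horner's rule."""
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc

def derivative(coeffs):
    """Monomial coefficients of P' given those of P (lowest degree first)."""
    return [j * coeffs[j] for j in range(1, len(coeffs))]

def move_test_points(coeffs, df, d2f, xs):
    """One Newton step per interior test point toward a zero of e'(x), e = P - f.

    df and d2f are callables for f' and f''. End points xs[0] and xs[-1] stay fixed.
    """
    d1, d2 = derivative(coeffs), derivative(derivative(coeffs))
    moved = [xs[0]]
    for x in xs[1:-1]:
        e1 = horner(d1, x) - df(x)   # e'(x)
        e2 = horner(d2, x) - d2f(x)  # e''(x)
        moved.append(x - e1 / e2)
    moved.append(xs[-1])
    return moved

# Toy check with hypothetical data: P(x) = x**2 and f(x) = 0, so e'(x) = 2x, e''(x) = 2,
# and one Newton step sends the interior point straight to the extremum at x = 0.
print(move_test_points([0.0, 0.0, 1.0], lambda x: 0.0, lambda x: 0.0, [-1.0, 0.3, 1.0]))
# [-1.0, 0.0, 1.0]
```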

Calculating the derivatives of a polynomial is straightforward. One must also be able to calculate the first and second derivatives of f(x). Remez' algorithm requires an ability to calculate $f(x)$, $f'(x)$, and $f''(x)$ to extremely high precision. The entire algorithm must be carried out to higher precision than the desired precision of the result.

After moving the test points, the linear-equation step is repeated to obtain a new polynomial, and Newton's method is used to move the test points again. This sequence is continued until the result converges to the desired accuracy. The algorithm converges very rapidly: convergence is quadratic for well-behaved functions. If the test points are within $10^{-15}$ of the correct result, they will be approximately within $10^{-30}$ of the correct result after the next round.

Remez' algorithm is typically started by choosing the extrema of the Chebyshev polynomial $T_{N+1}$ as the initial points, since the final error function will be similar to that polynomial.
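Putting the pieces together, the loop below is a minimal Remez sketch for a well-behaved f: it starts from the extrema of $T_{N+1}$ mapped to [a, b], alternates the linear solve with one Newton step per interior point, and stops after a fixed number of rounds (`rounds=8` is an arbitrary assumption, not a convergence test). Unlike the recommendation above, it works in ordinary double precision, which is adequate only because the target error here (around $10^{-4}$) is far above the rounding level. All helper names are hypothetical.

```python
import math

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def level_fit(f, xs):
    """Solve P(x_i) - f(x_i) = +eps, -eps, ... for P_0..P_N and eps."""
    n = len(xs) - 2
    rows = [[x ** j for j in range(n + 1)] + [-1.0 if i % 2 == 0 else 1.0]
            for i, x in enumerate(xs)]
    sol = solve(rows, [f(x) for x in xs])
    return sol[:-1], sol[-1]

def horner(coeffs, x):
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc

def derivative(coeffs):
    return [j * coeffs[j] for j in range(1, len(coeffs))]

def remez(f, df, d2f, n, a=-1.0, b=1.0, rounds=8):
    """Degree-n minimax sketch for f on [a, b]; no safeguards for badly behaved f."""
    # Initial test points: the N+2 extrema of T_{N+1}, mapped from [-1, 1] to [a, b].
    xs = [0.5 * (a + b) - 0.5 * (b - a) * math.cos(k * math.pi / (n + 1))
          for k in range(n + 2)]
    for _ in range(rounds):
        coeffs, _eps = level_fit(f, xs)
        d1, d2 = derivative(coeffs), derivative(derivative(coeffs))
        # One Newton step per interior point toward a zero of e'(x), e = P - f.
        interior = [x - (horner(d1, x) - df(x)) / (horner(d2, x) - d2f(x))
                    for x in xs[1:-1]]
        xs = [xs[0]] + interior + [xs[-1]]
    coeffs, eps = level_fit(f, xs)
    return coeffs, eps, xs

# Usage: degree-4 approximation of exp on [-1, 1] (f, f' and f'' are all exp).
coeffs, eps, xs = remez(math.exp, math.exp, math.exp, 4)
grid = [-1.0 + 2.0 * i / 4000 for i in range(4001)]
max_err = max(abs(horner(coeffs, x) - math.exp(x)) for x in grid)
print(f"eps = {eps:.4e}, sampled max |P - f| = {max_err:.4e}")
print("final test points:", [round(x, 4) for x in xs])
```

If the iteration has converged, the error is level, so the sampled maximum of |P − f| should agree with |eps| to several digits; a noticeable gap means more rounds (or better starting points) are needed.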

Main journals

- Journal of Approximation Theory

- Constructive Approximation

- East Journal on Approximations

See also

- Chebyshev polynomials

- Generalized Fourier series

- Orthogonal polynomials

- Orthonormal basis

- Fourier series

- Schauder basis

- Padé approximant
