Ornstein isomorphism theorem: Difference between revisions

en>Michael Hardy →Further reading: deleting the "stub" tag |

en>Yobot m →History: clean up, References after punctuation per WP:REFPUNC and WP:CITEFOOT using AWB (8792) |


In [[probability and statistics]], a '''Markov renewal process''' (MRP) is a [[stochastic process|random process]] that generalizes the notion of [[Markov property|Markov]] jump processes. Other random processes, such as a [[Markov chain]], a [[Poisson process]], and a [[renewal process]], can be derived as special cases of an MRP.

==Definition==

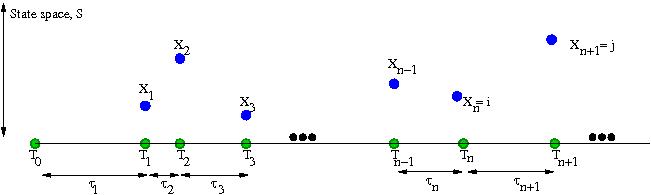

Consider a state space <math>\mathrm{S}</math> and a set of random variables <math>(X_n,T_n)</math>, where <math>T_n</math> are the jump times and <math>X_n</math> are the associated states in the [[Markov chain]] (see Figure). Let the inter-arrival time be <math>\tau_n=T_n-T_{n-1}</math>. Then the sequence <math>(X_n, T_n)</math> is called a Markov renewal process if

:<math>\Pr(\tau_{n+1}\le t, X_{n+1}=j \mid (X_0, T_0), (X_1, T_1),\ldots, (X_n=i, T_n)) </math>

:<math> =\Pr(\tau_{n+1}\le t, X_{n+1}=j \mid X_n=i)\quad \forall n \ge 1,\ t\ge 0,\ i,j \in \mathrm{S} </math>

[[File:Markov renewal process - Depiction.jpg|center|600px|An illustration of a Markov renewal process]]
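The definition can be illustrated with a short simulation. This is only a minimal sketch under stated assumptions: the names <code>simulate_mrp</code>, <code>P_EXAMPLE</code> and <code>example_holding</code>, the three-state transition table, and the particular holding-time distributions are all hypothetical and chosen for illustration. The sketch draws the next state from the row of the current state and then draws the inter-arrival time given the pair of states, so each step depends on the past only through <math>X_n</math>.

```python
import random

# Hypothetical embedded-chain transition probabilities Pr(X_{n+1}=j | X_n=i).
P_EXAMPLE = {
    "a": {"b": 0.7, "c": 0.3},
    "b": {"a": 1.0},
    "c": {"a": 0.5, "b": 0.5},
}


def example_holding(i, j, rng):
    """Hypothetical inter-arrival time sampler for a jump from i to j.

    State "a" uses a uniform holding time (not exponential), so the
    resulting process is a Markov renewal process but not a CTMC.
    """
    if i == "a":
        return rng.uniform(0.5, 1.5)
    return rng.expovariate(2.0)


def simulate_mrp(P, sample_holding, x0, n_jumps, rng):
    """Return [(X_0, T_0), ..., (X_n, T_n)] with T_0 = 0.

    Each step uses only the current state X_n, which is exactly the
    conditional-independence property in the definition.
    """
    x, t = x0, 0.0
    path = [(x, t)]
    for _ in range(n_jumps):
        targets = list(P[x].keys())
        weights = list(P[x].values())
        nxt = rng.choices(targets, weights=weights)[0]  # X_{n+1}
        tau = sample_holding(x, nxt, rng)               # tau_{n+1}
        t += tau                                        # T_{n+1} = T_n + tau_{n+1}
        x = nxt
        path.append((x, t))
    return path


if __name__ == "__main__":
    rng = random.Random(0)
    for state, time in simulate_mrp(P_EXAMPLE, example_holding, "a", 5, rng):
        print(f"{time:8.3f}  {state}")
```

Sampling the next state first and the holding time second is one convenient factorization of the joint kernel <math>\Pr(\tau_{n+1}\le t, X_{n+1}=j \mid X_n=i)</math>; drawing the time first and the state second would be equally valid.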

==Relation to other stochastic processes==

# If we define a new stochastic process <math>Y_t:=X_n</math> for <math>t \in [T_n,T_{n+1})</math>, then the process <math>Y_t</math> is called a semi-Markov process. The main difference between an MRP and a semi-Markov process is that the former is defined as a two-[[tuple]] of states and times, whereas the latter is the actual random process that evolves over time. Unlike a [[CTMC]], the process as a whole is not Markovian (that is, memoryless); it is Markovian only at the jump instants. This is the rationale behind the name '''''semi'''''-Markov.<ref>{{cite book|last=Medhi|first=J.|title=Stochastic processes|year=1982|publisher=Wiley & Sons|location=New York|isbn=978-0-470-27000-4}}</ref><ref>{{cite book|last=Ross|first=Sheldon M.|title=Stochastic processes|year=1999|publisher=Routledge|location=New York [u.a.]|isbn=978-0-471-12062-9|edition=2nd}}</ref><ref>{{cite book|last=Barbu|first=Vlad Stefan|title=Semi-Markov chains and hidden semi-Markov models toward applications : their use in reliability and DNA analysis|year=2008|publisher=Springer|location=New York|isbn=978-0-387-73171-1|coauthors=Limnios, Nikolaos}}</ref>

# A semi-Markov process in which all the holding times are [[exponential distribution|exponentially distributed]] is called a [[Continuous-time Markov process|continuous-time Markov chain/process (CTMC)]]. In other words, if the inter-arrival times are exponentially distributed and the waiting time in a state is independent of the next state reached, we have a CTMC.

#:<math>\Pr(\tau_{n+1}\le t, X_{n+1}=j \mid (X_0, T_0), (X_1, T_1),\ldots, (X_n=i, T_n))=\Pr(\tau_{n+1}\le t, X_{n+1}=j \mid X_n=i)</math>

#:<math>=\Pr(X_{n+1}=j \mid X_n=i)(1-e^{-\lambda_i t}), \text{ for all } n \ge 1,\ t\ge 0,\ i,j \in \mathrm{S} </math>

# The sequence <math>X_n</math> in the MRP is a discrete-time [[Markov chain]]. In other words, if the time variables are ignored in the MRP equation, we end up with a [[DTMC]].

#:<math>\Pr(X_{n+1}=j \mid X_0, X_1, \ldots, X_n=i)=\Pr(X_{n+1}=j \mid X_n=i)\quad \forall n \ge 1,\ i,j \in \mathrm{S} </math>

# If the inter-arrival times <math>\tau_n</math> are independent and identically distributed, and their distribution does not depend on the state <math>X_n</math>, then the process is a [[renewal process]]. So, if the states are ignored and we have a sequence of iid times, then we have a renewal process.

#:<math>\Pr(\tau_{n+1}\le t \mid T_0, T_1, \ldots, T_n)=\Pr(\tau_{n+1}\le t)\quad \forall n \ge 1,\ \forall t\ge 0 </math>
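The relations in the list above can be made concrete on a single realization of an MRP. The following is a minimal sketch, not a reference implementation: the function names and the sample path are hypothetical. Given a list of <math>(X_n, T_n)</math> pairs, it evaluates the semi-Markov process <math>Y_t</math>, extracts the embedded discrete-time chain and the inter-arrival times, and evaluates the CTMC form of the kernel, <math>\Pr(X_{n+1}=j \mid X_n=i)(1-e^{-\lambda_i t})</math>.

```python
import math
from bisect import bisect_right


def semi_markov_state(path, t):
    """Y_t = X_n for t in [T_n, T_{n+1}); path is [(X_0, T_0), ...].

    For t at or beyond the last recorded jump time, the last state is
    returned, because the realization does not say when it is left.
    """
    times = [T for _, T in path]
    if t < times[0]:
        raise ValueError("t precedes the first jump time")
    n = bisect_right(times, t) - 1  # largest n with T_n <= t
    return path[n][0]


def embedded_chain(path):
    """Drop the times: the remaining X_0, X_1, ... is the embedded DTMC."""
    return [x for x, _ in path]


def inter_arrival_times(path):
    """Drop the states: tau_n = T_n - T_{n-1} for n >= 1."""
    times = [T for _, T in path]
    return [b - a for a, b in zip(times, times[1:])]


def ctmc_kernel(p_ij, lam_i, t):
    """CTMC special case: Pr(tau <= t, X_{n+1}=j | X_n=i) = p_ij (1 - exp(-lam_i t))."""
    return p_ij * (1.0 - math.exp(-lam_i * t))


if __name__ == "__main__":
    # Hypothetical realization of (X_n, T_n).
    path = [("a", 0.0), ("b", 1.5), ("a", 2.0), ("c", 4.5)]
    print(semi_markov_state(path, 1.5))  # right-continuous: already in "b"
    print(embedded_chain(path))
    print(inter_arrival_times(path))
    print(ctmc_kernel(0.5, 2.0, 1.0))
```

The binary search reflects the half-open interval <math>[T_n,T_{n+1})</math>: at a jump time the process has already moved to the new state.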

==See also==

* [[Markov process]]

* [[Renewal theory]]

* [[Variable-order Markov model]]

{{inline|date=July 2012}}

==References and Further Reading==

{{Reflist}}

[[Category:Markov processes]]

Latest revision as of 12:50, 11 December 2012

